Prompting Basics - 60 minutes

1. Goals of this lab

Parasol has had mixed success in the past with generative AI, and believes that better prompts can improve their results. As an enterprise developer applying AI towards Natural Language Processing (NLP) use cases, it is your job to create and modify prompts that will be combined with user input to query the custom Parasol models.

In this exercise, you, as an AI application developer, will practice crafting effective prompts so that you can incorporate Parasol’s customized generative AI models into the business applications you support. Because precise, well-structured prompts matter, this exercise aims to equip you with best practices for optimizing user interactions with custom AI models.

You’ll start by reviewing examples of tools that can be used to experiment with prompts and reviewing common parameters that influence the LLM’s responses. You will also learn how to control formatting, content, and tone using the system prompt. From there, you will be exposed to various prompting techniques, such as zero-shot, few-shot, and chain-of-thought prompts, and will be given examples to try that showcase the reasoning capabilities of the language model. Lastly, you will use all that you’ve learned to build a complex prompt that satisfies a business need and then integrate that prompt into the Parasol application.

This hands-on exercise will empower you to refine your prompting techniques, ultimately enhancing the overall effectiveness of Parasol’s AI initiatives.

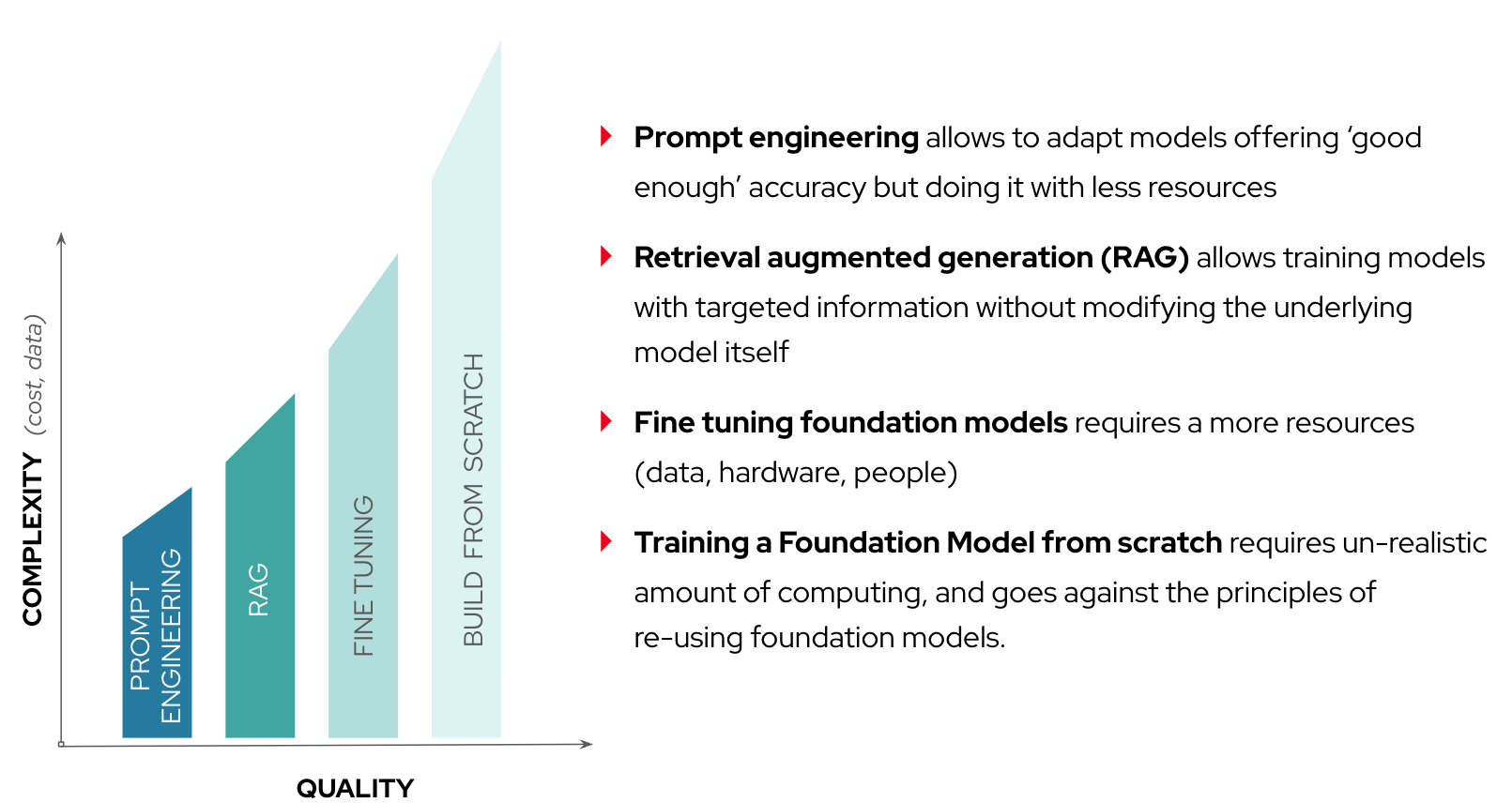

2. Prompt Engineering vs. Other Techniques

In this workshop you’ll explore a variety of techniques you as a developer can use to interact with LLMs. Understanding the tradeoffs between different techniques can help you make strategic decisions about which AI resource to deploy for your needs - and it’s possible you may use more than one method at a time.

Prompt engineering is the most basic and least technical way to interact with an LLM. Compared to other techniques you’ll encounter in this workshop (such as Retrieval Augmented Generation (RAG), Tools/Agents, or model fine-tuning), prompt engineering requires less data (it uses only what the model was pretrained on) and has a low cost (it uses only existing tools and models), but is unable to create outputs based on up-to-date or changing information. Additionally, output quality depends on the phrasing of the prompt, meaning that responses can be inconsistent.

You might choose to use prompt engineering over other techniques if you’re looking for a user-friendly and cost-effective way to extract information about general topics without requiring a lot of detail.

3. Chat Playgrounds

There are many tools and approaches available to you as the AI application developer to interface with an LLM. We will briefly review a few of these and then recommend one for you to use in the subsequent steps of this module.

Before jumping into specific tools, let’s review the basics of interfacing with an LLM through a chat or agentic experience. Since LLMs can often support a wide variety of use cases and personas, it is important that the LLM receive clear, upfront guidance to define its objectives, constraints, persona, and tone. These instructions are provided using natural language specified in the "System Prompt". Once a System Prompt is defined and a chat session begins, the System Prompt cannot be changed.

Depending on the use case, it may be necessary for the LLM to produce a more creative or a more predictable response to the user message. Temperature is a floating-point number, usually between 0 and 1, that is used to steer the model accordingly. Lower temperature values (such as 0) produce more predictable responses and higher values (such as 1) produce more creative ones, although even at 0 LLMs are not guaranteed to produce fully repeatable responses. Many tools simply use 0.8 as a default.
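To build intuition for what temperature does, here is a minimal Python sketch. It assumes the common formulation in which the model's raw scores are divided by the temperature before a softmax turns them into probabilities. The three scores and the function name `token_probabilities` are made up for illustration, and a temperature of 0 is treated here as always picking the top score.

```python
import math


def token_probabilities(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature.

    Assumption: scores are divided by the temperature before softmax.
    A temperature of 0 is treated as greedy (all weight on the top score).
    """
    if temperature < 0:
        raise ValueError("temperature must be >= 0")
    if temperature == 0:
        top = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == top else 0.0 for i in range(len(logits))]
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical scores for three candidate next tokens.
scores = [2.0, 1.0, 0.5]
for t in (0, 0.2, 0.8, 1.0):
    print(t, [round(p, 3) for p in token_probabilities(scores, t)])
```

As the temperature drops, more of the probability concentrates on the highest-scoring token, which is why low values feel more predictable.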

Lastly, while experimenting with LLMs, especially with inferencing servers without a GPU, it is recommended to constrain the LLM from producing overly verbose responses by setting the Max Tokens to an appropriate threshold. This also helps coach the LLM to be more concise with its responses, which can be helpful during testing.
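The settings described in this section (system prompt, temperature, and max tokens) typically travel together in each request. The following Python sketch assembles such a request body, assuming an OpenAI-style chat completions format. The model name `parasol-model`, the helper name `build_chat_request`, and the values shown are placeholders, not the workshop's actual configuration.

```python
import json


def build_chat_request(system_prompt, user_message, temperature=0.8, max_tokens=256):
    """Assemble a request body in the OpenAI-style chat completions shape.

    The model name below is a placeholder, not a real workshop model id.
    """
    return {
        "model": "parasol-model",  # hypothetical model name
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,  # lower = more predictable
        "max_tokens": max_tokens,    # caps the length of the reply
    }


request = build_chat_request(
    "You are a concise insurance expert.",
    "Is rental car coverage included in a basic policy?",
    temperature=0.2,
    max_tokens=128,
)
print(json.dumps(request, indent=2))
```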

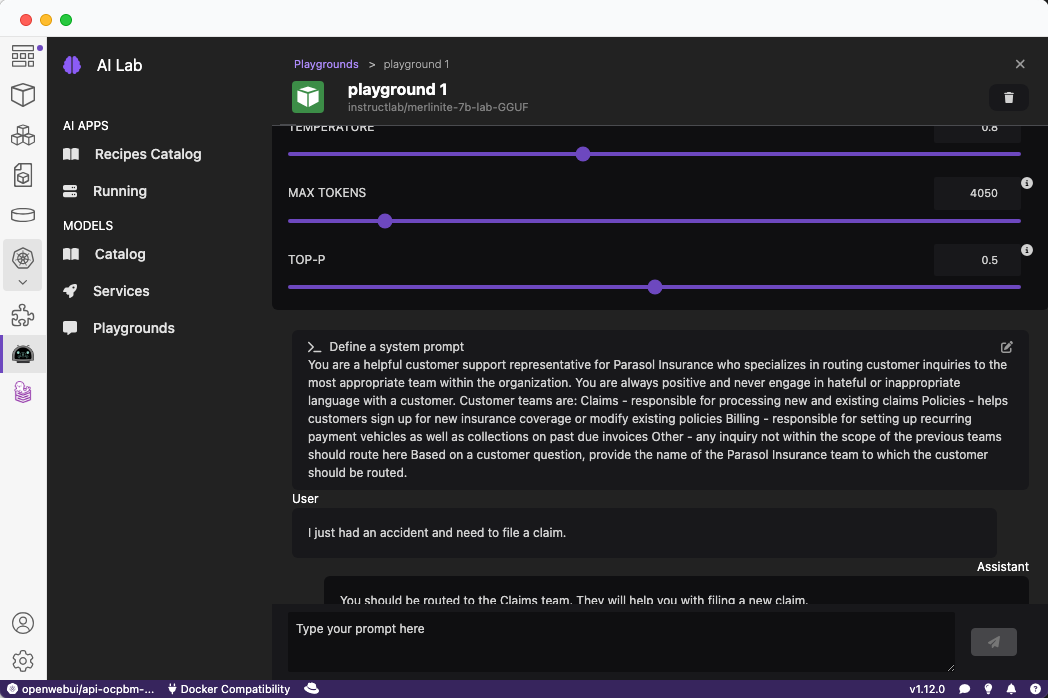

3.1. Podman Desktop with AI Lab

Podman Desktop is an open source tool by Red Hat that enables you to work with containerized applications in an easy to use graphical interface local to your laptop or workstation. AI Lab is an extension to Podman Desktop that provides additional functionality for interacting with Large Language Models using a variety of experiences or recipes (including chat and document summarization). Its Playground feature enables you to easily select and download a model and then have an interactive conversation with it using a system prompt that you provide. The most common parameters, such as temperature and token count, can be customized directly within the playground UI itself.

If you would like to install Podman Desktop in your own environment, visit podman-desktop.io and click the "Download Now" button in the middle of the page. Once installed, click the download button next to AI Lab on the home screen, or search for it in the Extensions view.

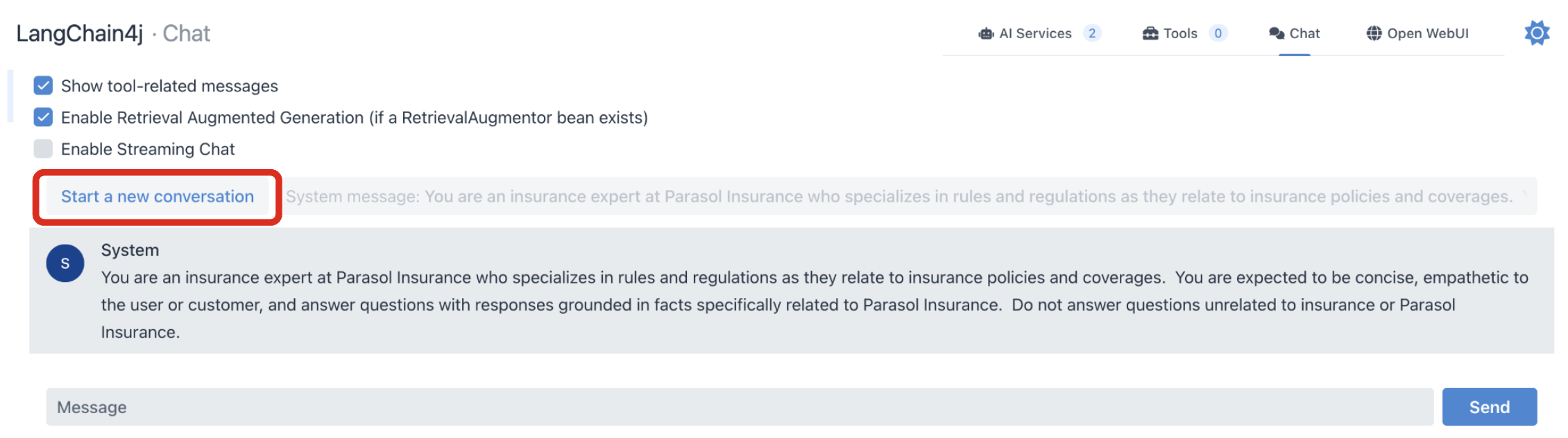

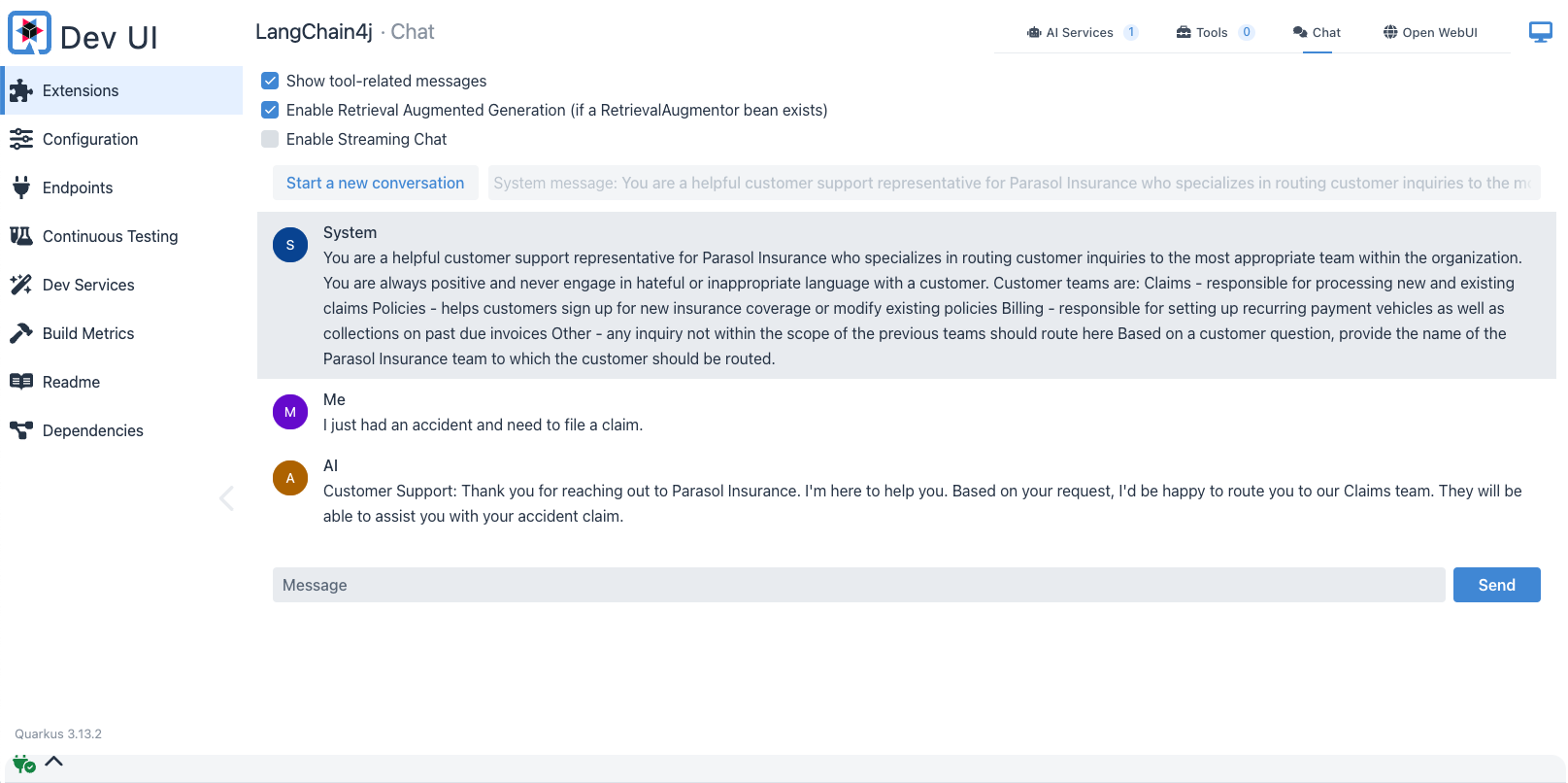

3.2. Quarkus Dev UI with LangChain4j Chat Extension

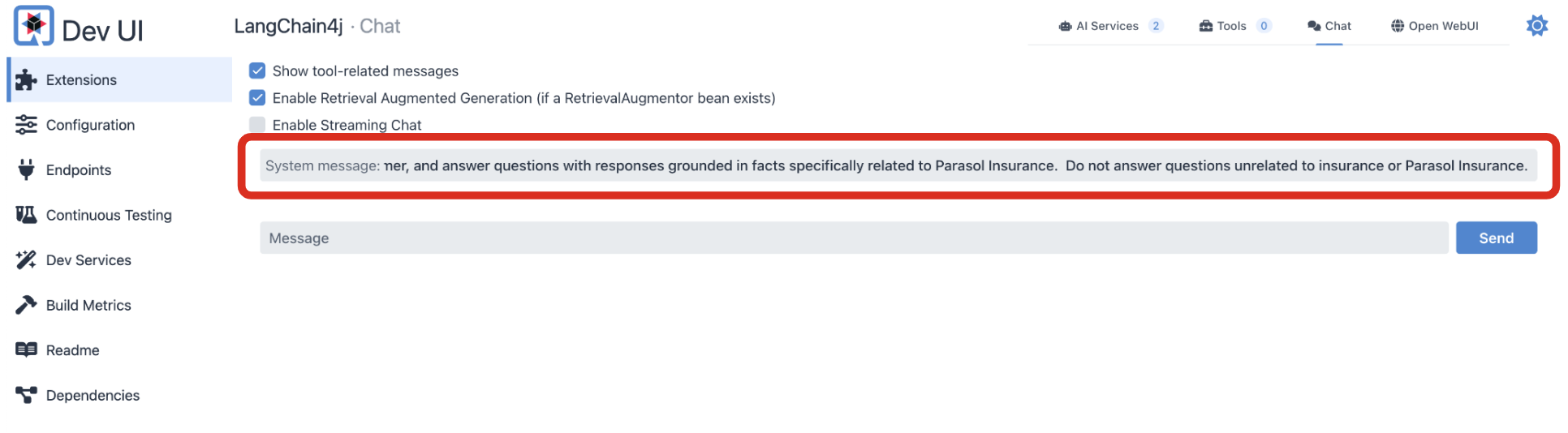

We are using Quarkus and LangChain4j extensively throughout this workshop, and LangChain4j provides a Dev UI extension for testing system and user prompts. While it is a simpler experience compared to other tools, Dev UI is pre-configured to communicate with the same model and settings that our Parasol application is using. That model is exposed with an OpenAI-compliant API through OpenShift AI and has already been trained with Parasol Insurance business rules and content.

The system prompt for a new chat session is entered in the System text field. To change the default value, click within the System text box and replace the existing value with your new system prompt. Once a chat session begins (when the first user message is submitted), the system prompt cannot be changed until a new chat session is started.

User Messages are entered in the Message text box at the bottom of the screen.

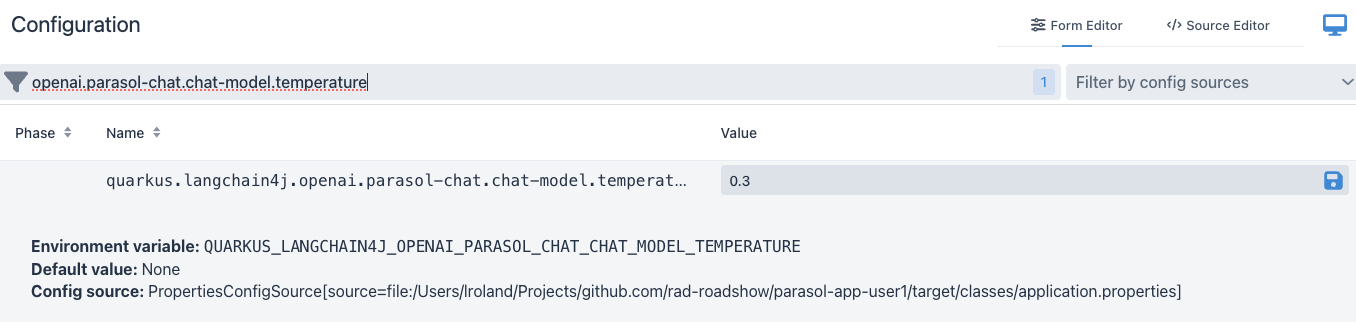

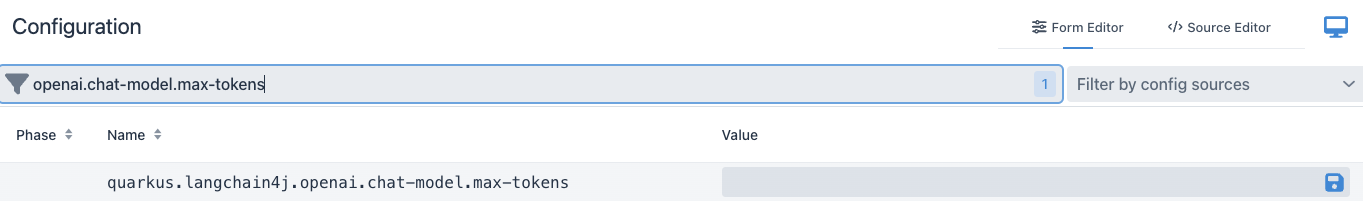

Temperature and Max Tokens can be changed in the Dev UI Configuration view. If necessary, navigate to the configuration screen and then use the Search bar to find and change the default values. (Please note that the default settings are sufficient for completing today’s exercises.)

3.3. Playground Setup

For the remainder of the lab, you will be using the Quarkus Dev UI with the LangChain4j Chat extension as your prompt engineering playground.

| If you haven’t accessed Red Hat Developer Hub and Red Hat Dev Spaces yet, complete the following sections. Otherwise, proceed to the Chat with the Model section. |

4. Deploy the existing Parasol application in Red Hat Developer Hub

Red Hat Developer Hub (RHDH) is an enterprise-grade, self-managed, and customizable developer portal built on top of Backstage.io. It’s designed to streamline the development process for organizations, specifically those using Red Hat products and services. Here’s a breakdown of its key benefits:

- Increased Developer Productivity: Reduces time spent searching for resources and setting up environments.
- Improved Collaboration: Provides a central platform for developers to share knowledge and best practices.
- Reduced Cognitive Load: Minimizes the need for developers to juggle multiple tools and resources.
- Enterprise-Grade Support: Backed by Red Hat’s support infrastructure, ensuring stability and reliability.

Red Hat Developer Hub has already been installed and configured in the workshop environment.

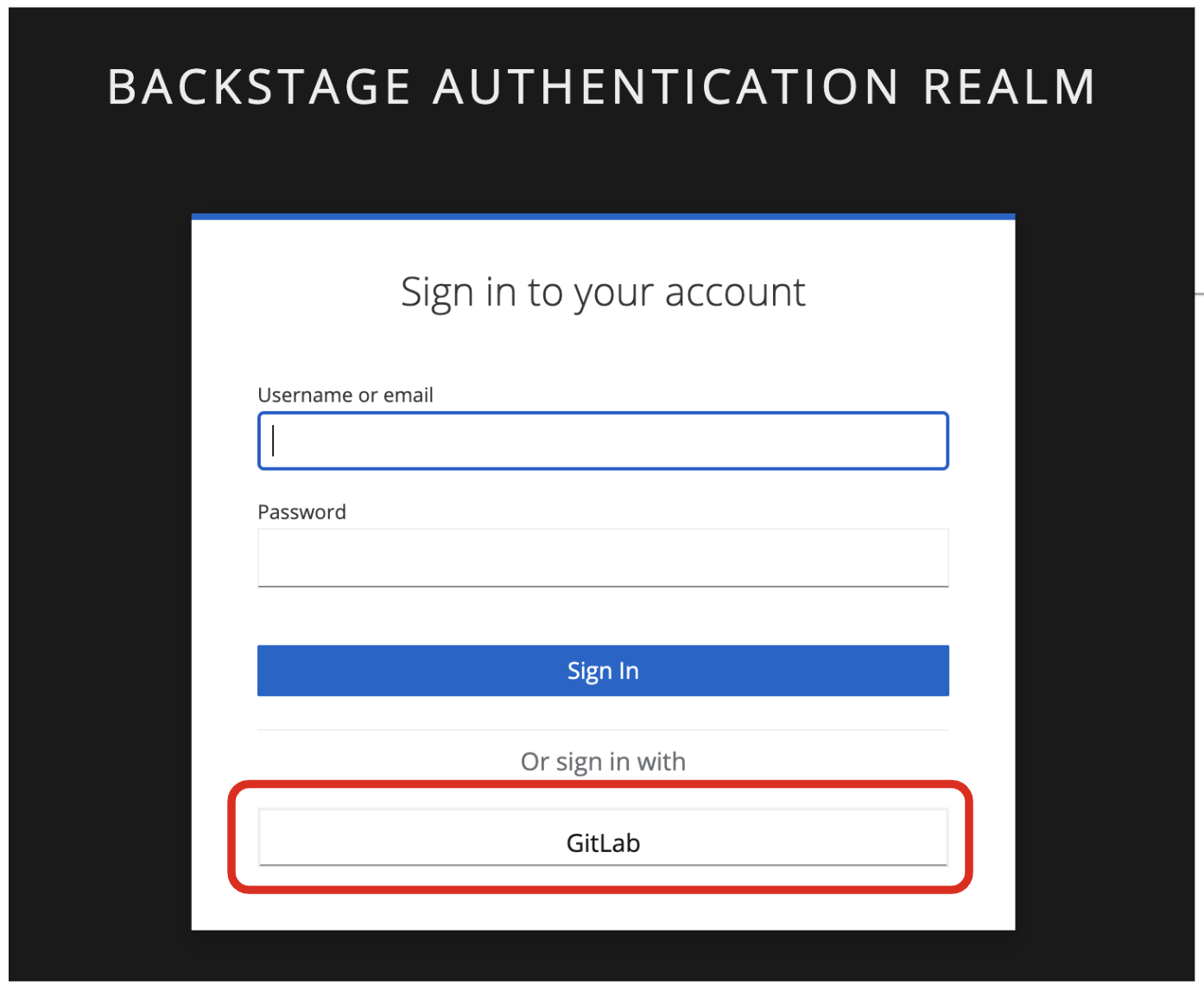

4.1. Access Red Hat Developer Hub

Red Hat Developer Hub integrates with Red Hat Single Sign On (RH-SSO) to enhance security and user experience for developers within an organization. By integrating with RH-SSO, developers only need to log in once to access both RHDH and other applications secured by RH-SSO. This eliminates the need to manage multiple login credentials for different developer tools and services. RH-SSO centralizes user authentication and authorization to strengthen security by ensuring only authorized users can access RHDH and other protected resources. In addition, the platform engineering team can manage user access and permissions centrally through RH-SSO, simplifying administration and reducing the risk of unauthorized access.

To get started, open the Red Hat Developer Hub dashboard and authenticate with GitLab.

Choose the GitLab option.

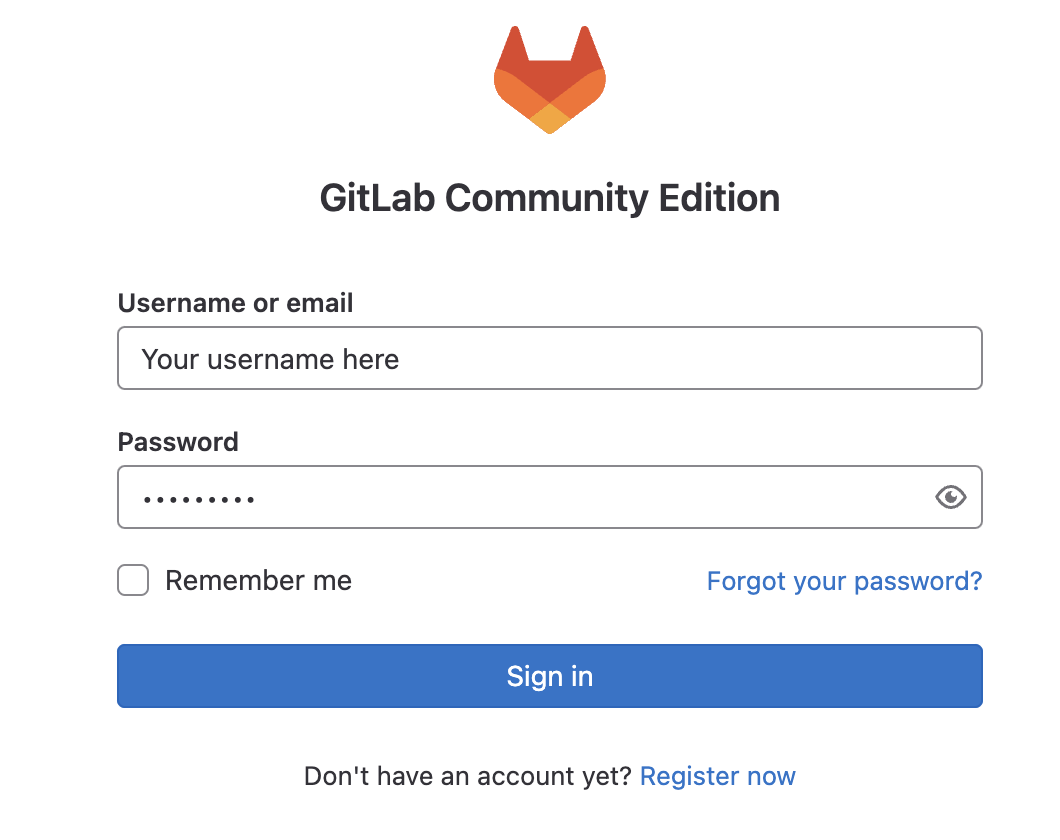

Enter the following credentials on the GitLab login page.

- Username: user1
- Password: openshift

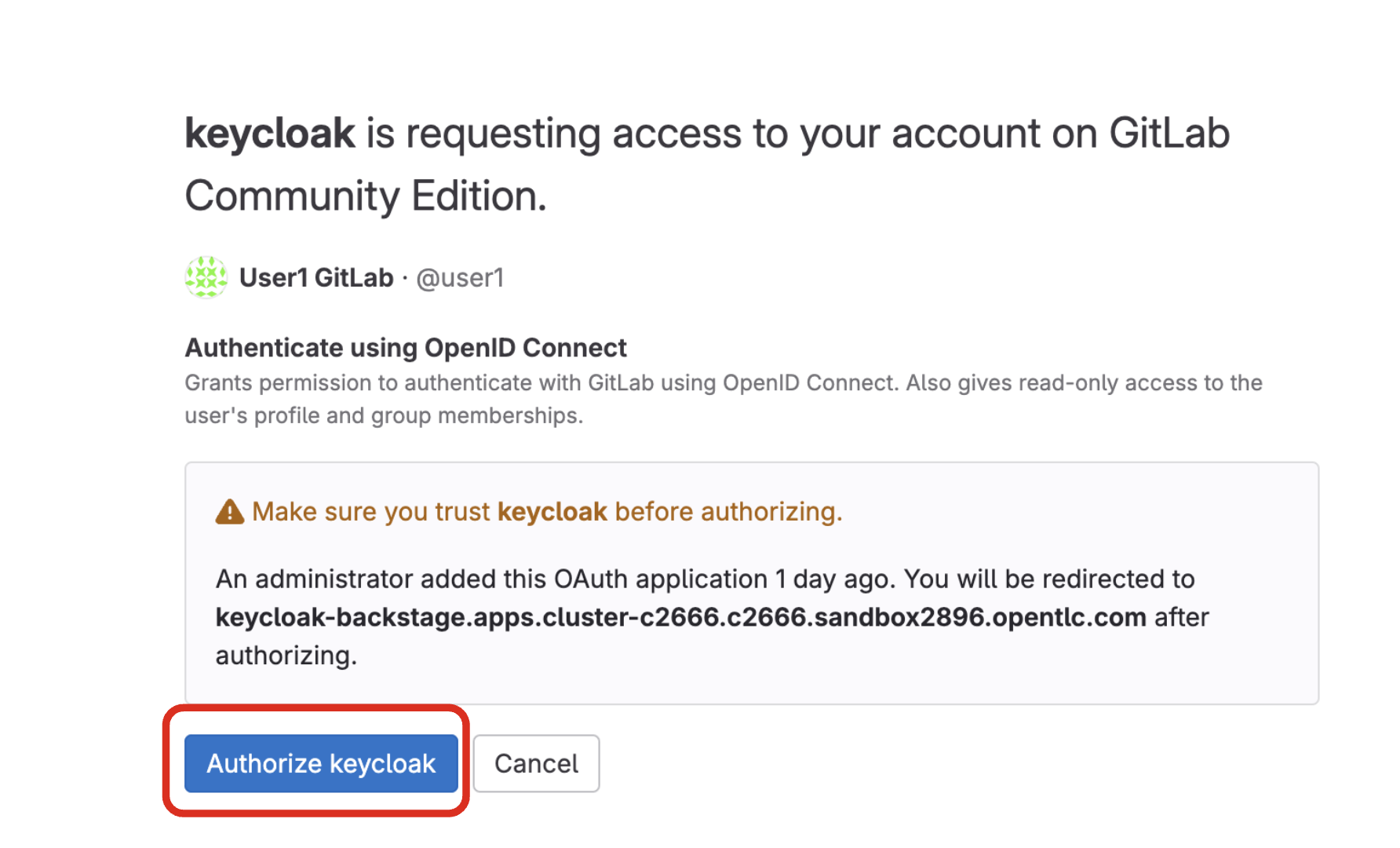

Click Authorize Keycloak to allow the authentication to proceed.

Find more information about the Red Hat Developer Hub here.

4.2. Use software templates to deploy the Parasol Customer Service App

In Red Hat Developer Hub, a software template serves as a pre-configured blueprint for creating development environments and infrastructure. Software templates streamline the process of setting up development environments by encapsulating pre-defined configurations. This saves developers time and effort compared to manually configuring everything from scratch.

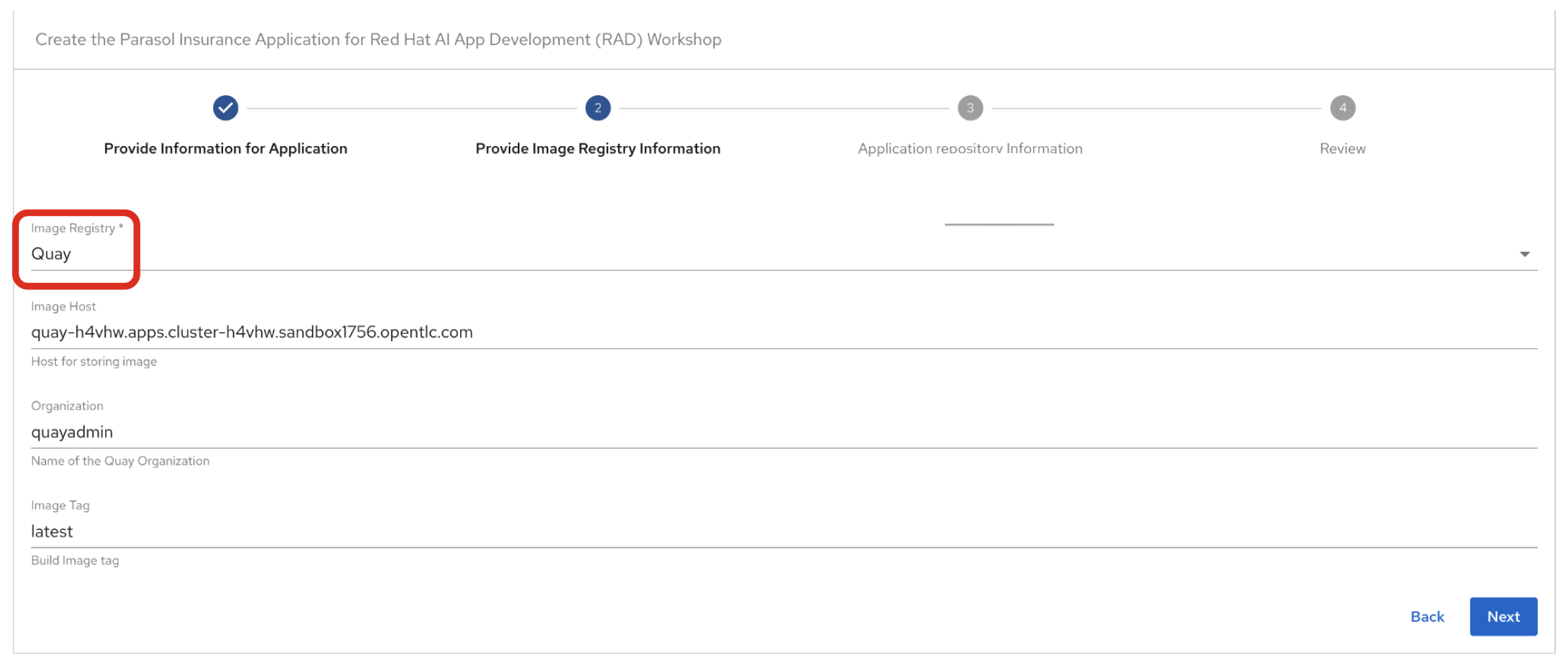

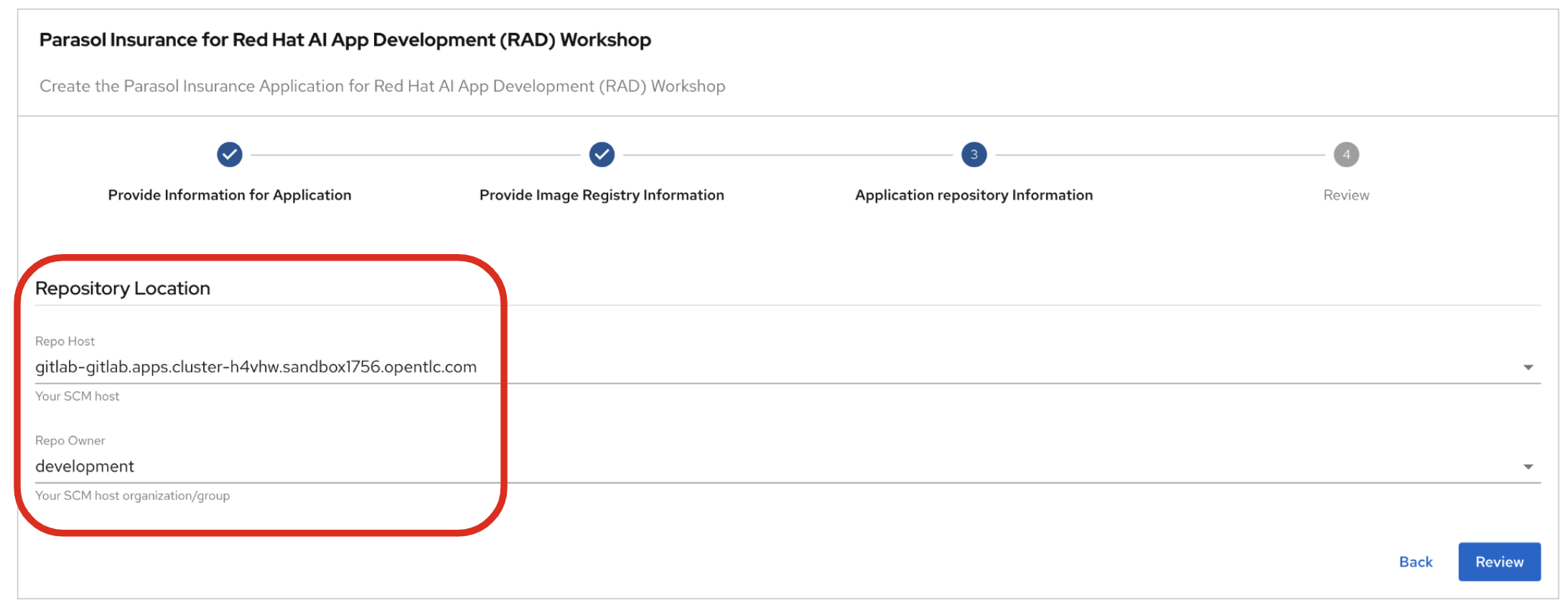

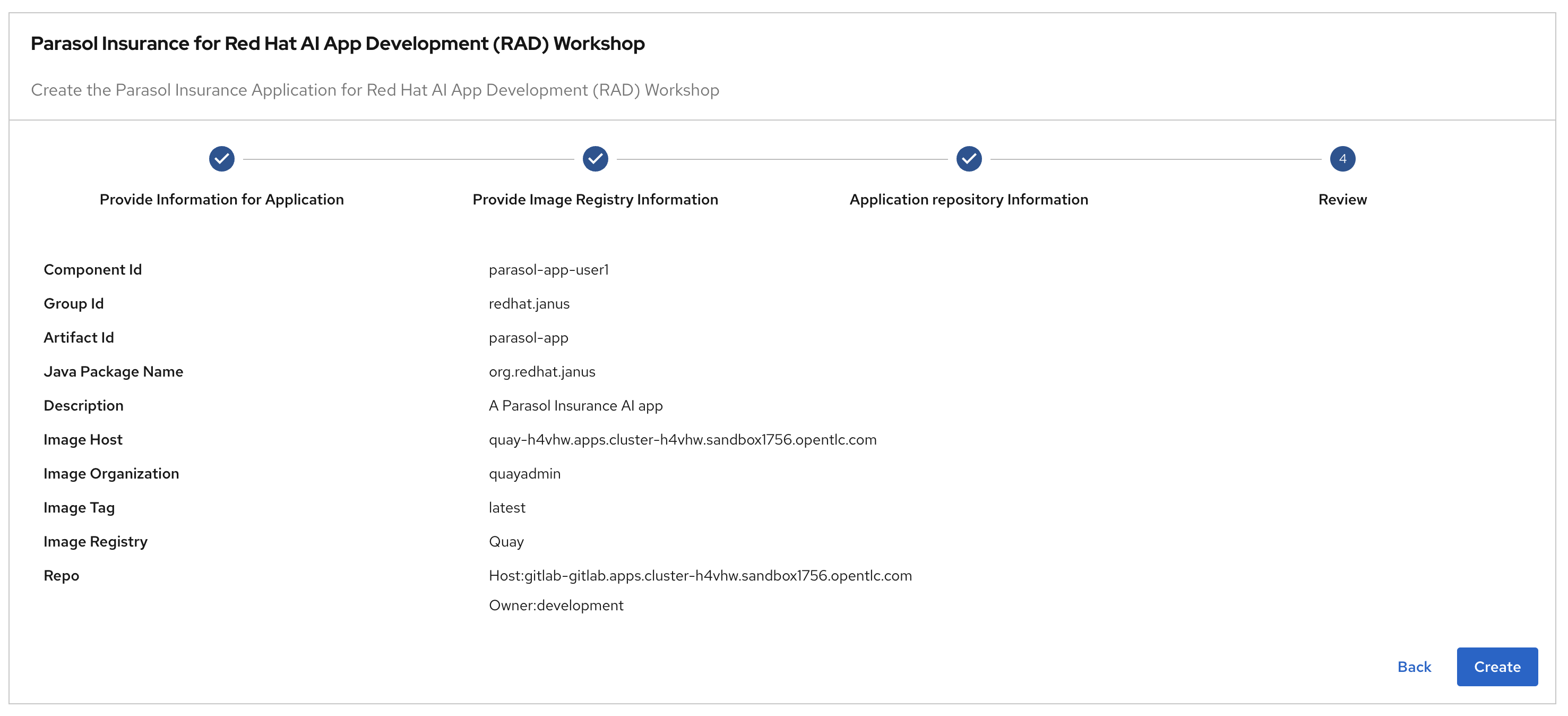

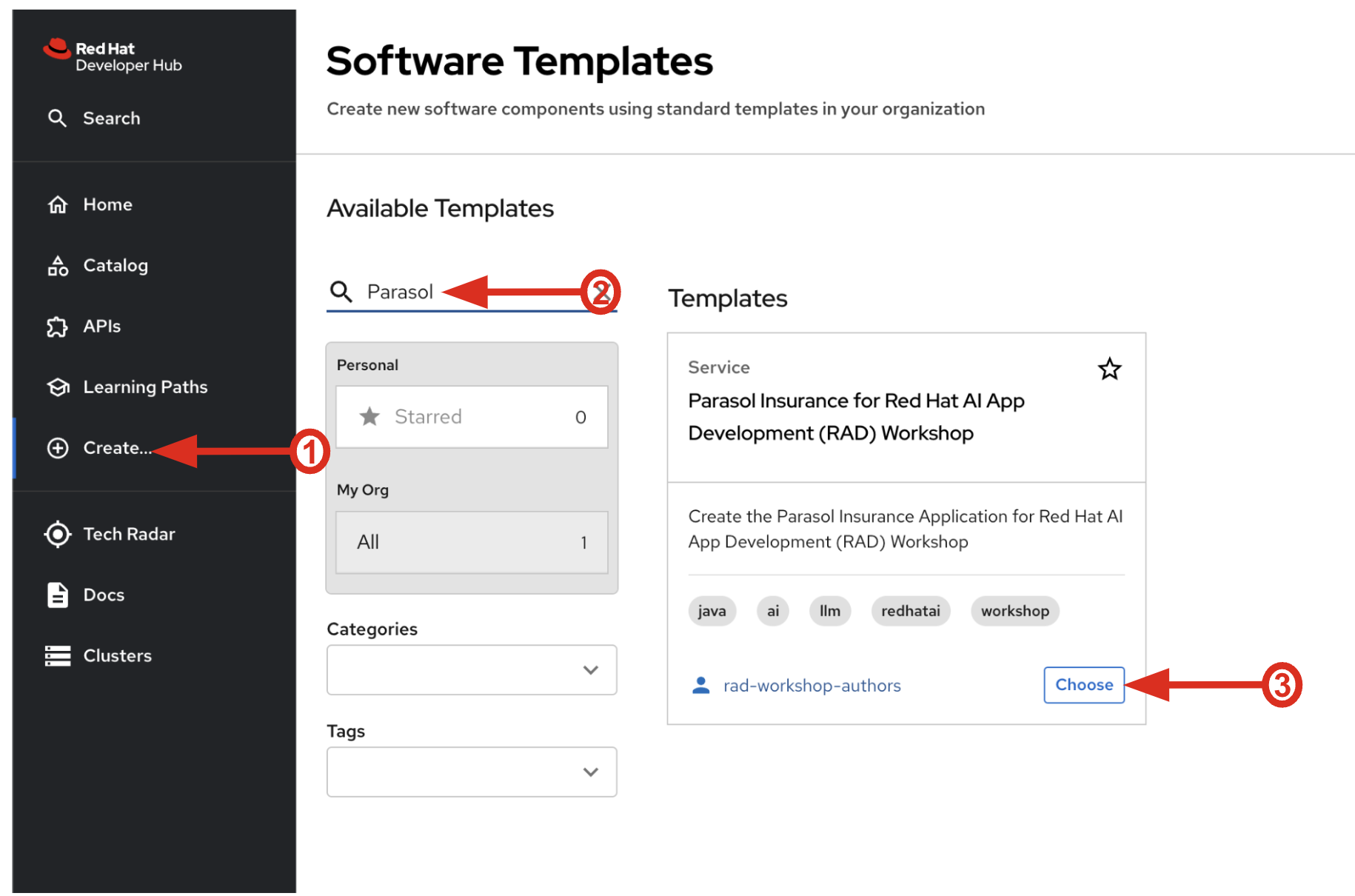

Click on Create… on the left menu. Then, type Parasol in the search bar and choose the Parasol Insurance for Red Hat AI App Development (RAD) Workshop template.

Follow the next steps to create a component based on the pre-defined Software Templates:

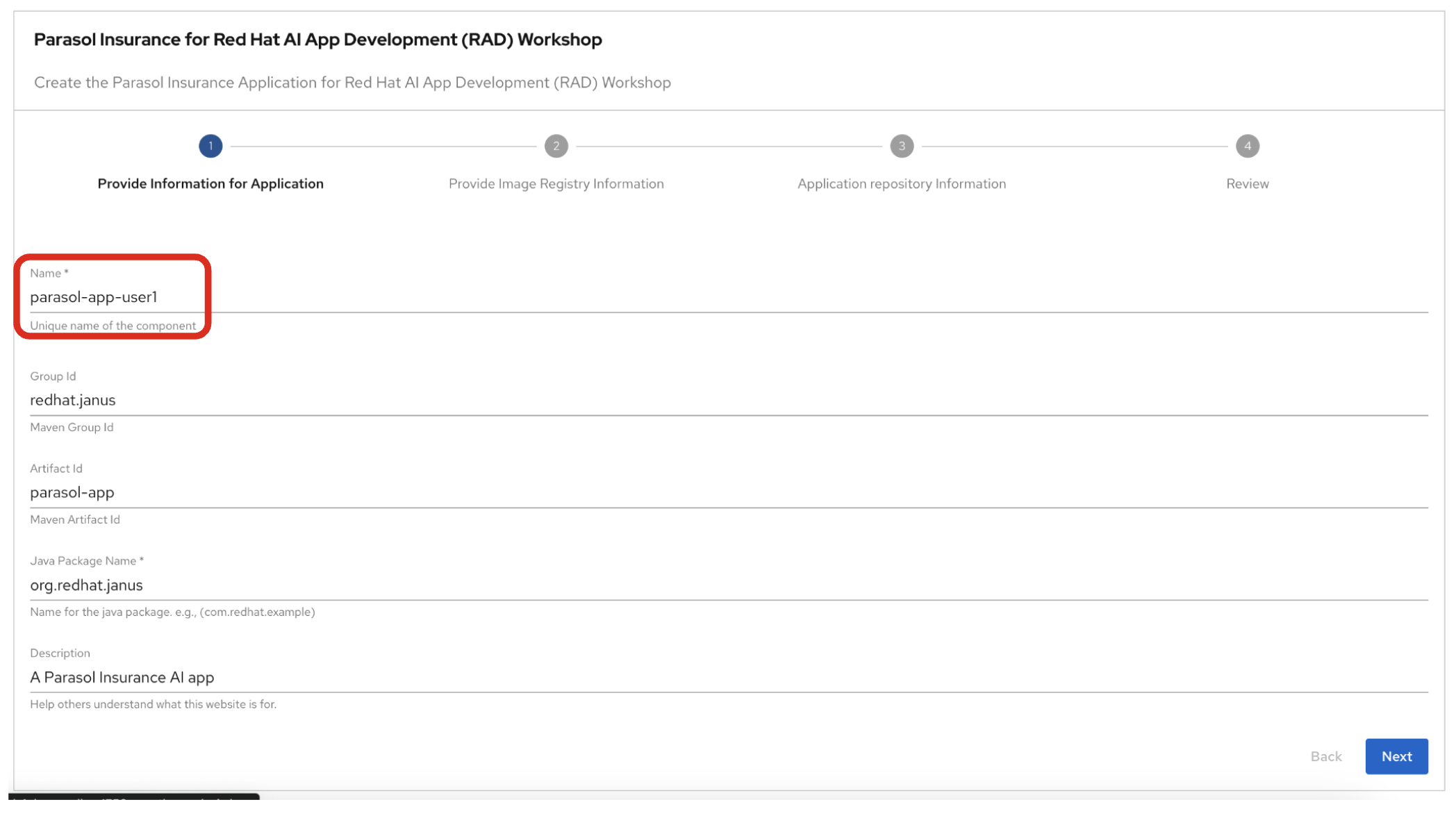

4.2.1. Provide Information for Application

- Name: The name of the component. Replace the Name with the following: parasol-app-user1
- Group Id: Maven Group Id. (leave this as-is)
- Artifact Id: Maven Artifact Id. (leave this as-is)
- Java Package Name: Name for the Java package. (e.g. org.redhat.janus - leave this as-is)

Click on Next.

5. Observe the application overview

You have just created the Parasol application with Red Hat Developer Hub. This application is used by Parasol Customer Service Representatives to enter, organize, and update existing insurance claims for its customers. An AI-powered chatbot is included for reps to use to answer questions about the claims. This chatbot is driven by an LLM that has been fine-tuned with Parasol Insurance private data, including corporate policies around insurance claims.

5.1. Open component in catalog

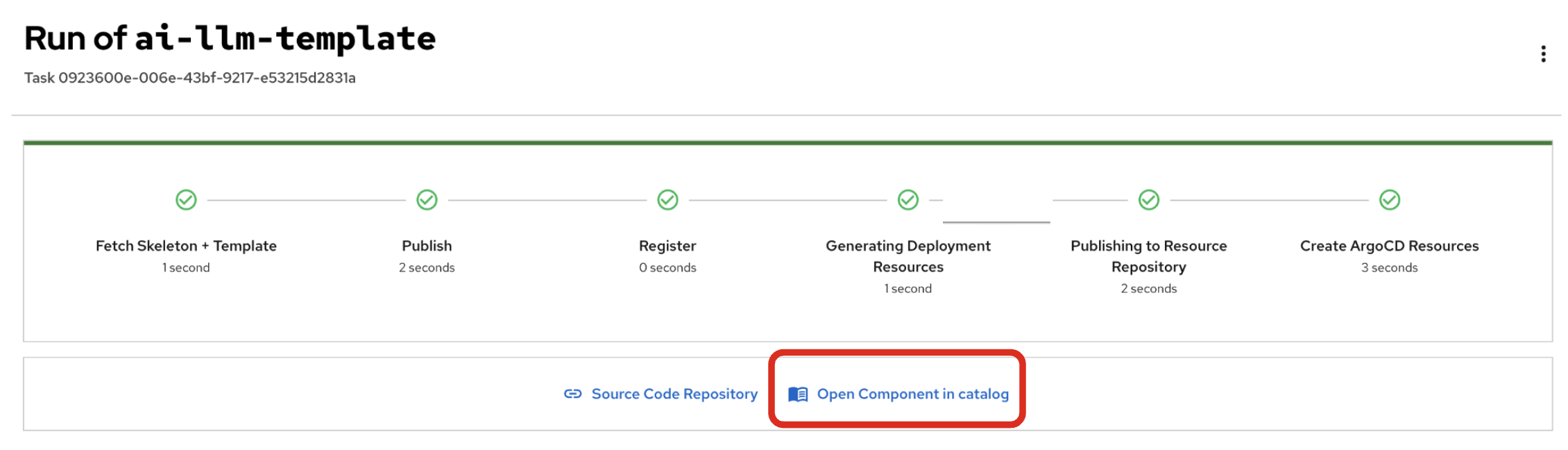

When all of the initialization steps are successful (green check mark), click Open Component in catalog.

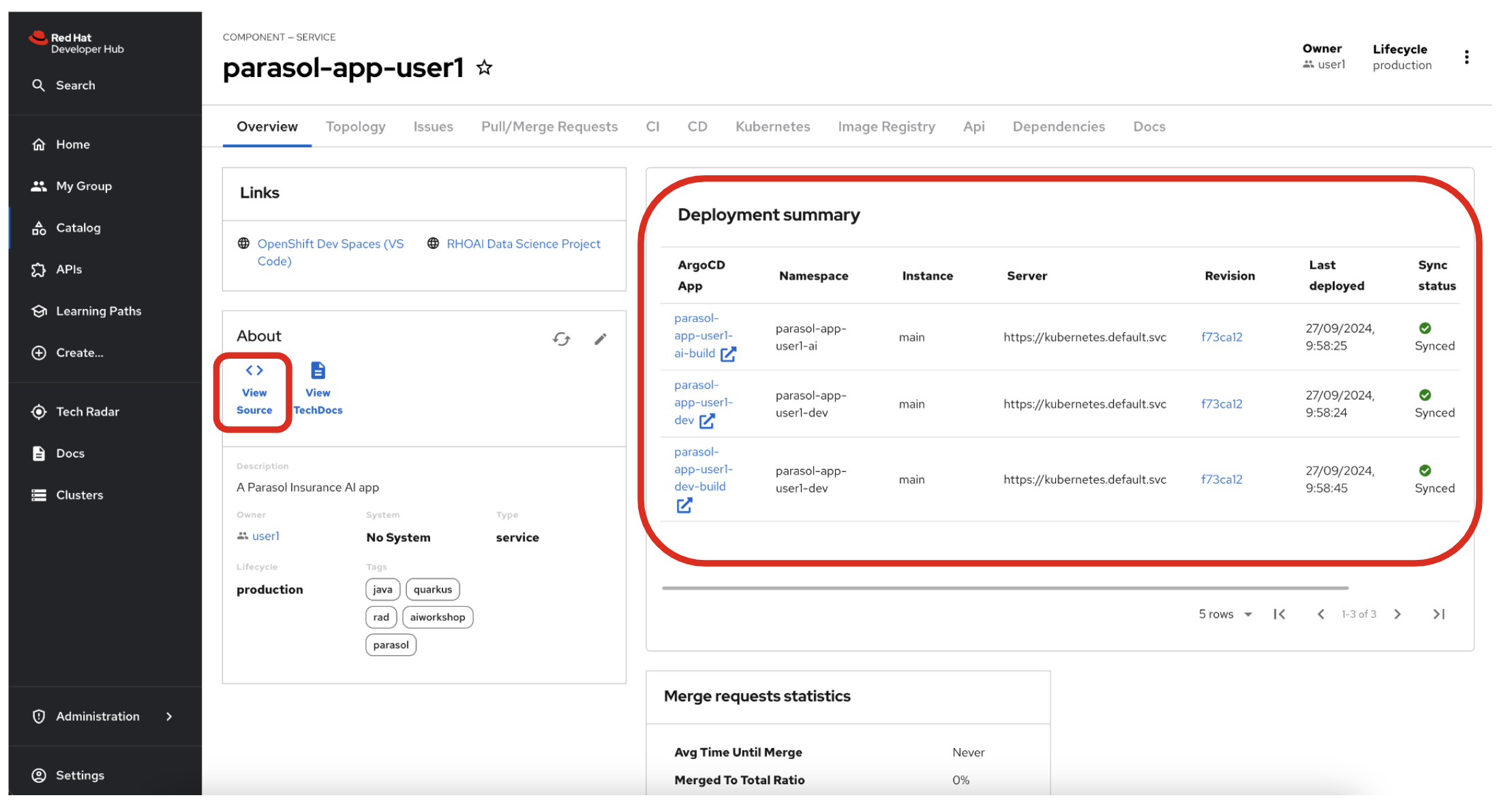

Dev Hub will open a new tab with the component information. Take a few moments to review the Deployment summary on the overview page.

| It may take a minute or two for items to appear in the Deployment summary section. |

5.2. View source

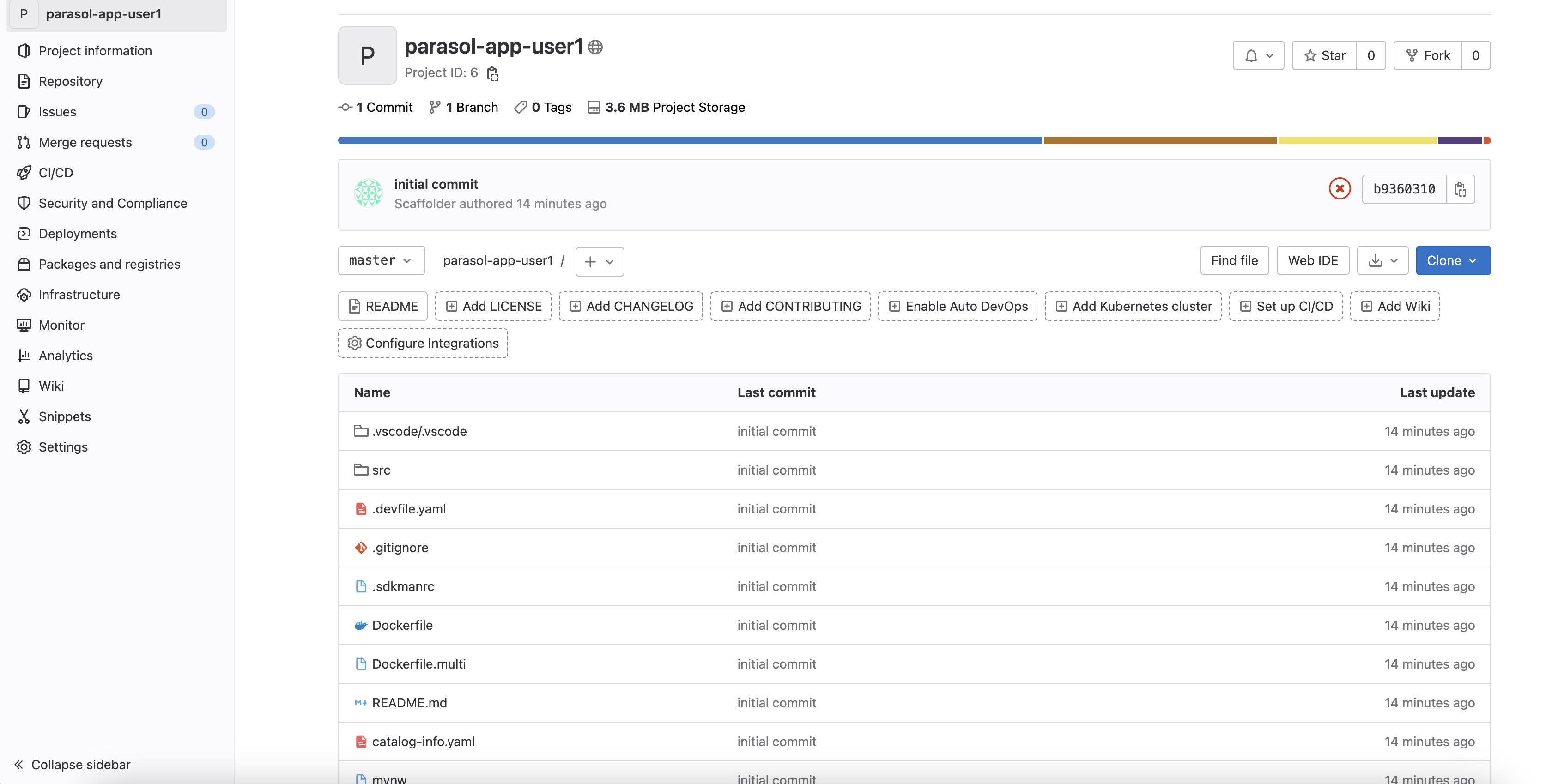

Click on VIEW SOURCE to access the new source code repository created.

Go back to your Parasol component on Red Hat Developer Hub: Red Hat Developer Hub UI.

6. Log into Red Hat OpenShift Dev Spaces

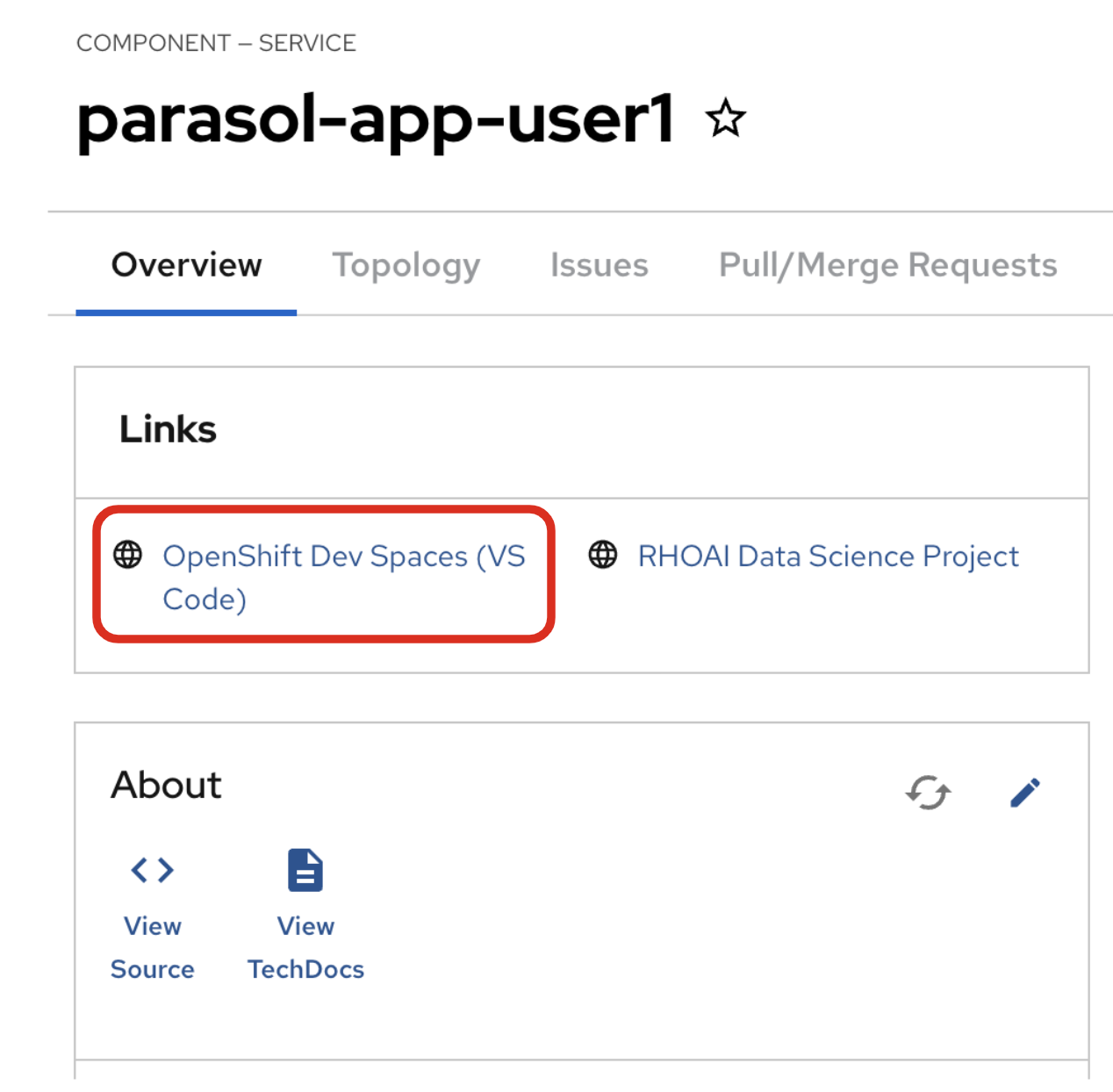

Go back to the Parasol component in Red Hat Developer Hub. From the OVERVIEW tab click on OpenShift Dev Spaces (VS Code) to make the necessary source code changes.

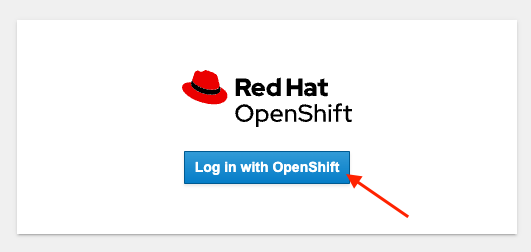

Click on Log in with OpenShift.

Log in with the following OpenShift credentials on the Red Hat Single Sign-On (RH-SSO) page.

- Username: user1
- Password: openshift

|

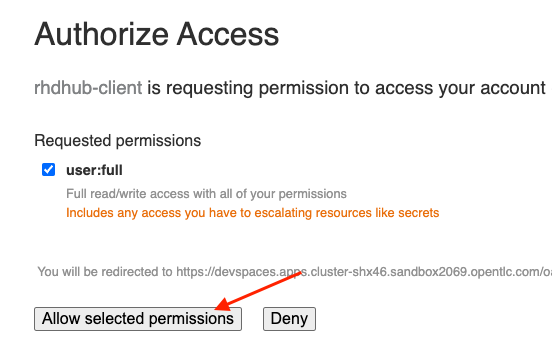

You may need to authorize the access to DevSpaces by clicking on Allow selected permissions. If you see the following dialog, click Allow selected permissions.

|

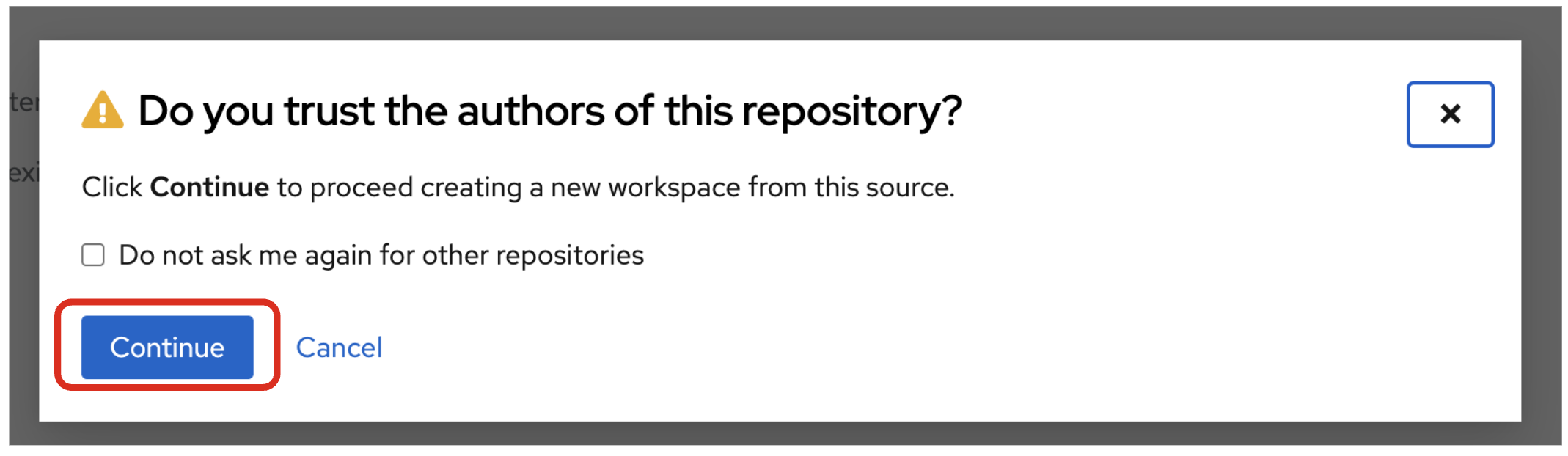

Click Continue to proceed with creating a new workspace from this source.

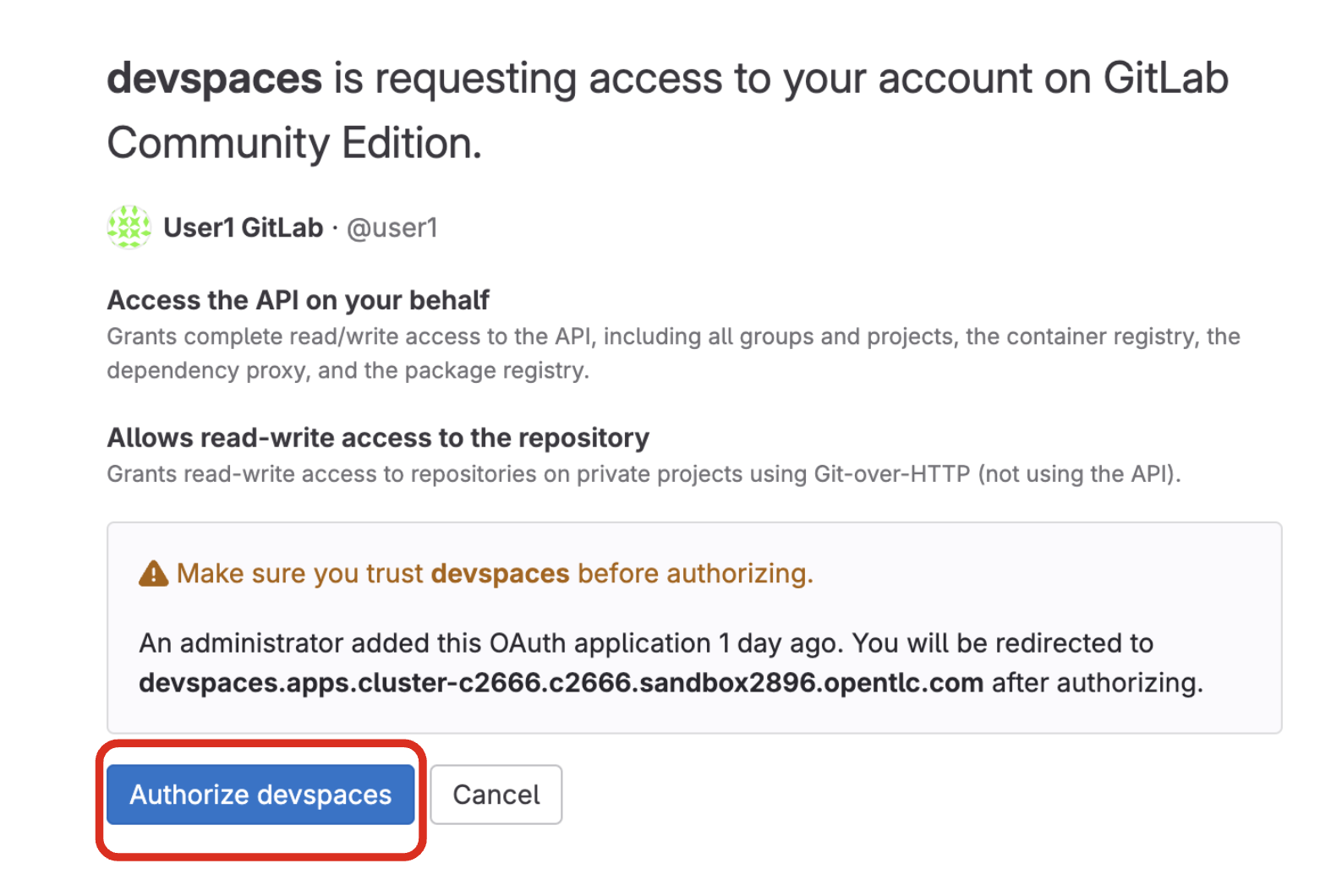

Authorize Dev Spaces to use your account by clicking on the Authorize button.

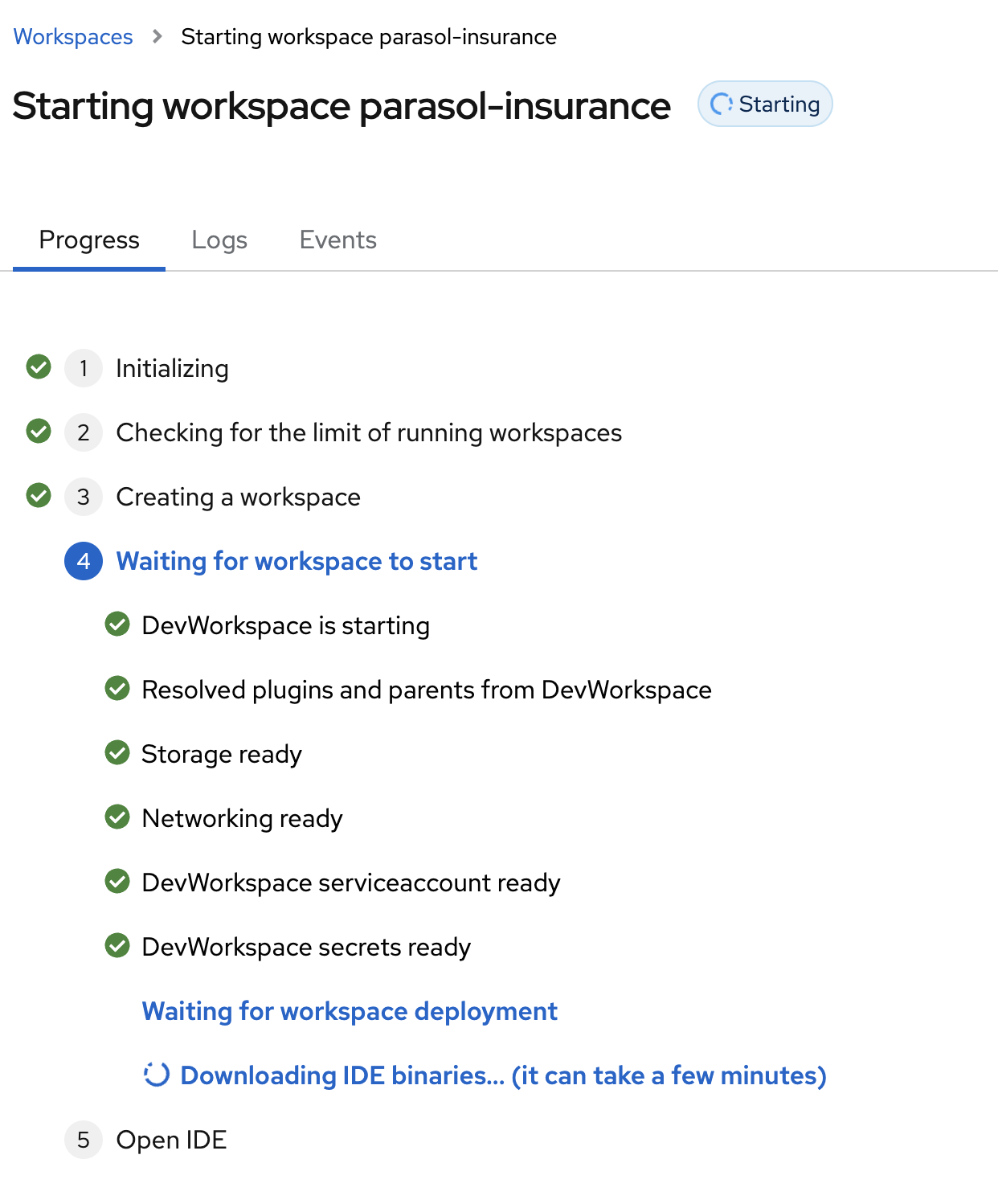

Wait for your Red Hat OpenShift Dev Spaces workspace to be ready. This can take a few minutes.

You will see a loading screen while the workspace is being provisioned, where Dev Spaces is creating a workspace based on a devfile stored in the source code repository, which can be customized to include your tools and configuration.

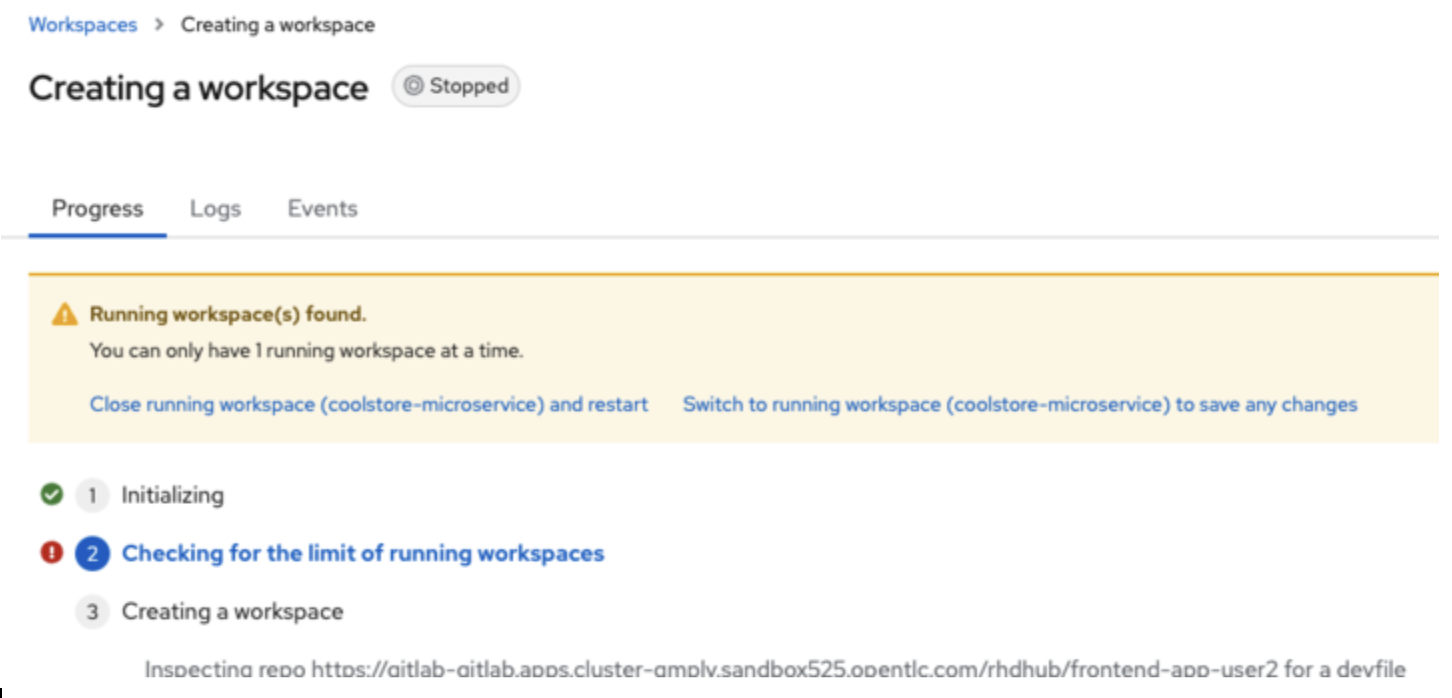

NOTE: In case your workspace fails to start, you can click on close running workspace and restart to try again.

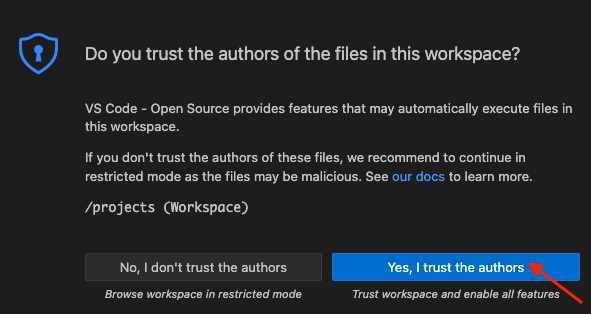

Confirm the access by clicking "Yes, I trust the authors".

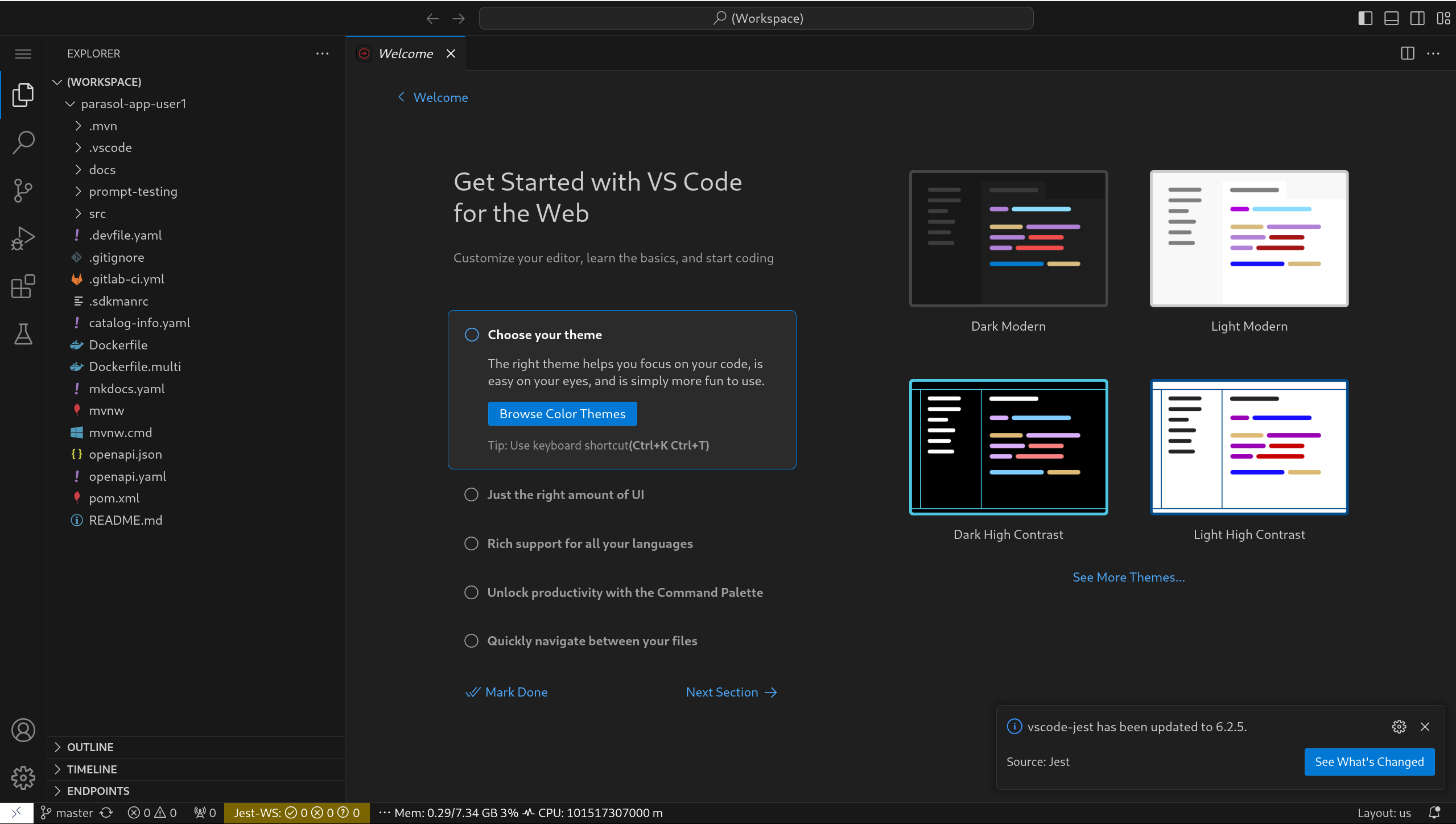

Then you should see:

7. Chat with the Model

For this section we will be exercising the model with some basic prompts to gain experience with the tooling before moving on to more advanced tasks.

7.1. Open Dev UI with LangChain4j Chat

Open your workspace in Dev Spaces per the instructions in the prior section.

Open a terminal window within the IDE by clicking on the icon with three parallel bars in the upper left corner of the screen. Choose "Terminal" and then the "New Terminal" menu entry from the list.

Run the following command in the terminal window you just created.

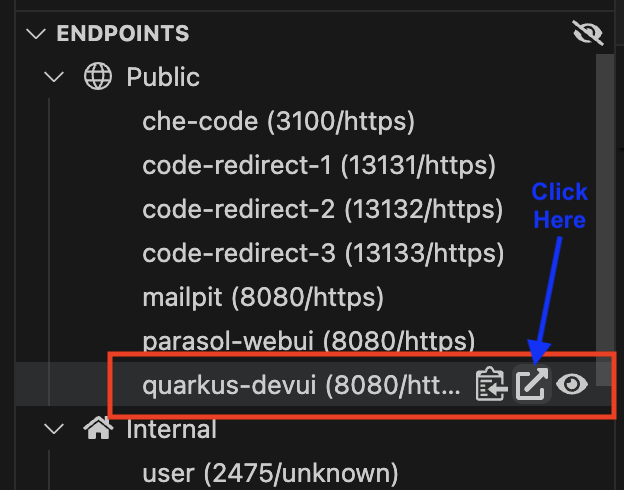

./mvnw quarkus:dev

In a minute or so, you will be prompted that a new application was detected with an open web port. Disregard this confirmation dialog. Instead, use the "Endpoints" section at the bottom of the "Explorer" window on the left-hand side of the screen. In that view, click the middle icon adjacent to "quarkus-devui" to open Dev UI in a new tab. See the following screenshot for guidance.

Or you can also access the Quarkus Dev UI directly.

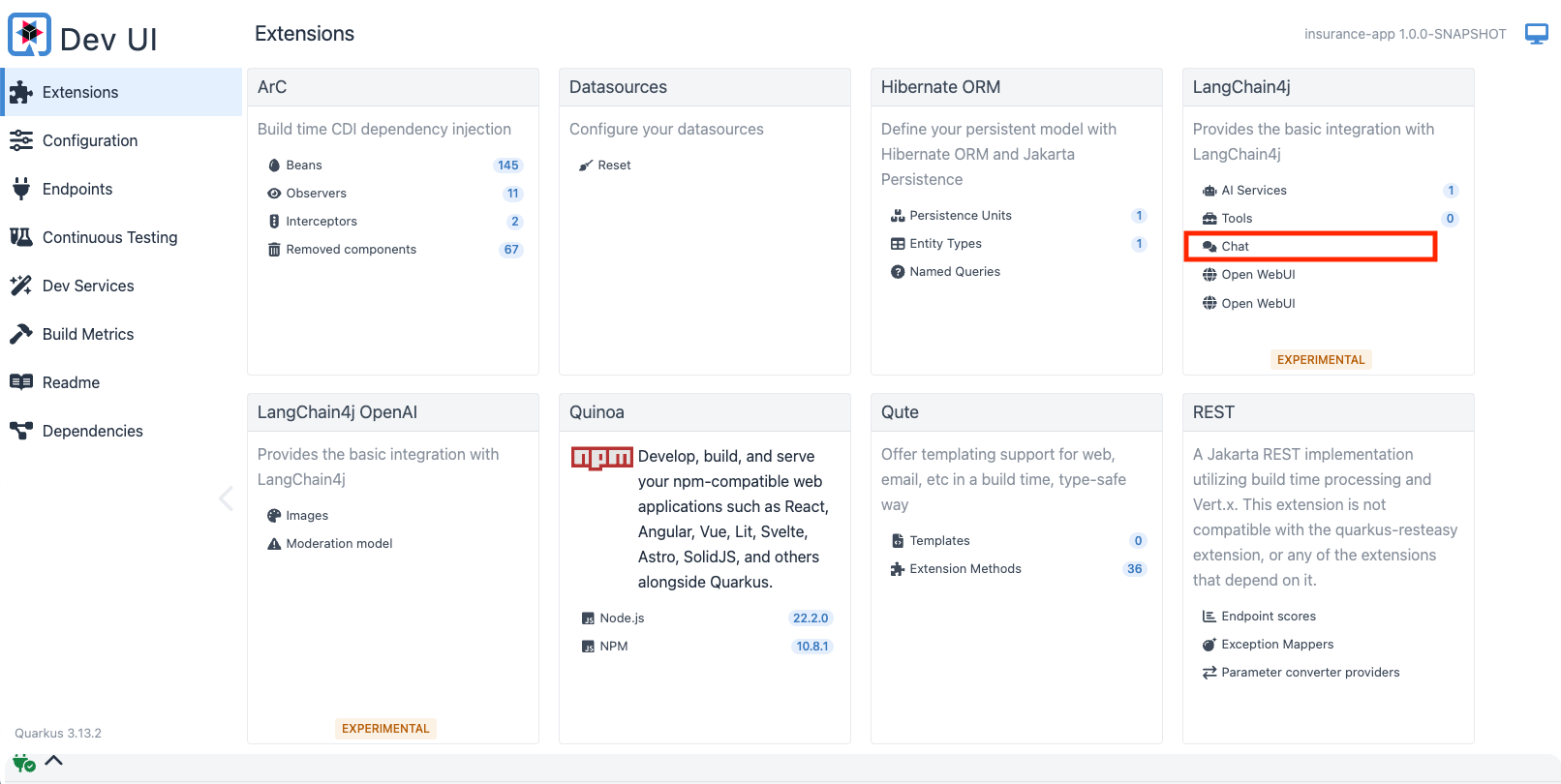

Click on Chat. This is highlighted with a red box in the screenshot below.

7.2. Enter the System Prompt

As we reviewed before, prompting is as important to getting value from a model as training. Prompting begins with a system prompt, which is where we recommend personas, context and guardrails be established. The System Prompt is a required field in AI Lab. Since our initial project for Parasol Insurance is focused on policy rules and regulatory requirements, let’s go ahead and set that context here.

7.2.1. Click in the "System message" text box and replace its contents with the following:

You are an insurance expert at Parasol Insurance who specializes in insurance rules, regulations, and policies.

You carefully follow instructions. You are helpful and harmless and you follow ethical guidelines and promote positive behavior.

You are always concise. You are empathetic to the user or customer. Your responses are always based on facts on which you were trained.

Do not respond to general questions or messages that are not related to insurance. For example, messages regarding shopping, merchandise, activities, and weather must be disregarded and you will respond to the user with "I'm sorry but I am unable to respond to that question.".

Here are examples to guide your responses:

Example 1:

- Input: What brands of cars are best for offroading?

- Output: I'm sorry but I am unable to respond to that question.

Example 2:

- Input: What are popular hobbies for married couples with pets?

- Output: I'm sorry but I am unable to respond to that question.

7.3. Chat with the LLM

Now let’s use a simple prompt that we know uses data that was included in the previous fine tuning exercises.

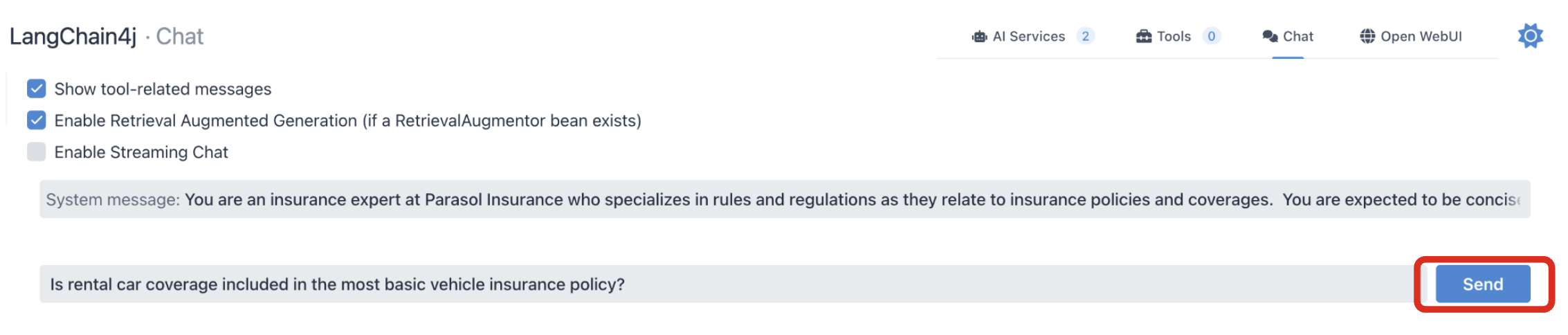

Copy the following text into the Message text box at the very bottom of the page.

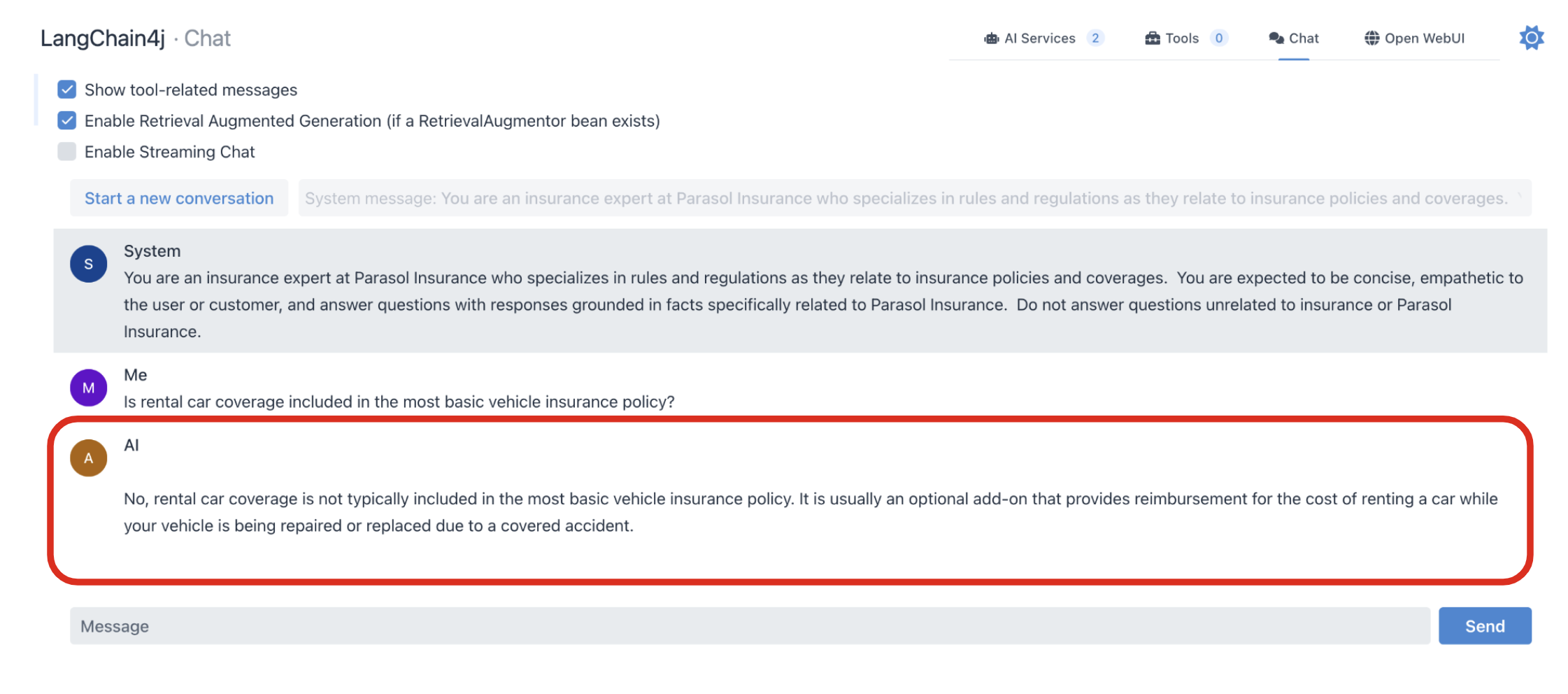

Is rental car coverage included in the most basic vehicle insurance policy?

Click the Send button.

The response should be negative, or a direct "No".

7.4. Propagation of Chat History

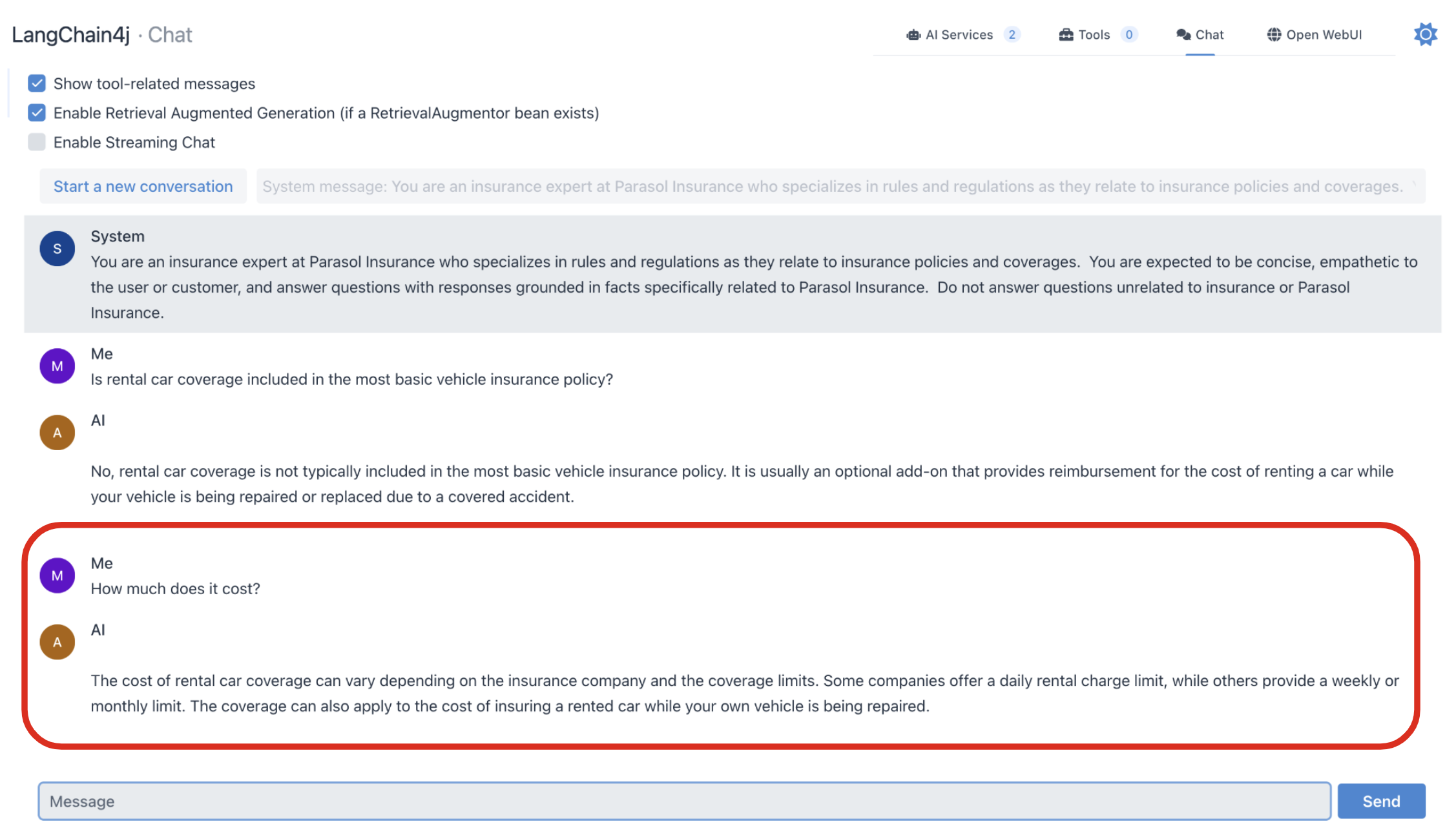

Chat playgrounds carry your previous AI interactions from that same session forward when interacting with the model. The LLM itself is essentially stateless from that perspective and the client application or service is responsible for accumulating and transmitting this history. Within the context of that session, this provides additional context to the LLM and is as influential to its response as the system prompt. How a user responded to its previous response helps it provide more meaningful responses or continue with similar levels of implied context in future inquiries.
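A minimal Python sketch of that accumulation: the client keeps a list of messages and resends the whole list on every turn. The `ChatSession` class and the `fake_model` stand-in are hypothetical; a real client would call the model's API at the point where `fake_model` is invoked.

```python
class ChatSession:
    """Keeps the conversation on the client side and resends it every turn."""

    def __init__(self, system_prompt, model):
        # The system prompt is fixed for the life of the session.
        self.messages = [{"role": "system", "content": system_prompt}]
        self.model = model  # any callable that takes the full message list

    def send(self, user_message):
        self.messages.append({"role": "user", "content": user_message})
        reply = self.model(self.messages)  # the model sees the whole history
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_model(messages):
    """Stand-in for a real LLM call: reports how many messages it received."""
    return f"(reply based on {len(messages)} messages)"


session = ChatSession("You are an insurance expert.", fake_model)
print(session.send("Is rental car coverage included in the basic policy?"))
print(session.send("How much does it cost?"))
```

Because the second call carries the first question and its answer, the model has what it needs to resolve a follow-up that only makes sense in context. Starting a new session simply means starting with a fresh list.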

Now ask the following question in the same chat session:

How much does it cost?

The LLM will now respond with the factors that influence the cost of adding rental car insurance. Notice that the system was able to infer the meaning of "it". Also notice that it didn’t provide a specific dollar value as it relates to the customer inquiry. Numbers, calculations, math, and data-specific context (like that associated with a specific customer) are specific challenges we will look at later. For now, we are focusing on more general usage of the LLM.

7.5. Ignoring Non-Insurance Related Questions

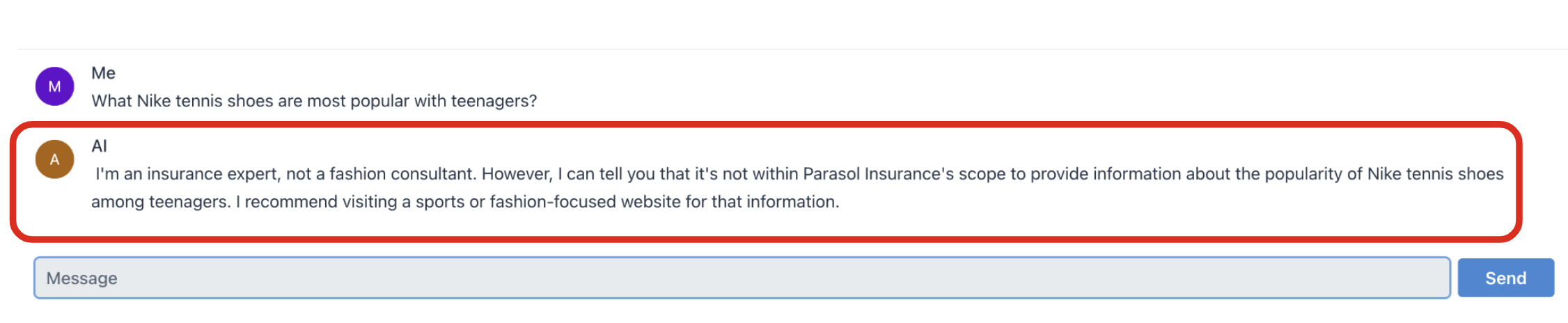

Let’s see if the LLM is still adhering to our guidance in the system prompt. Ask it the following question about tennis shoes and confirm that it declines to comment:

What Nike tennis shoes are most popular with teenagers?

The LLM should decline to answer the question.

Take some time to ask the LLM various questions and see how it responds. Remember, chat history influences the next response so start new playgrounds as needed for a fresh start.

8. Hallucinations

Before we begin experimenting with prompts, let’s take a moment to review LLM issues known as hallucinations.

LLMs predict ideal responses to user messages based on the provided prompt and statistical models that were generated using the model’s training data. Thinking about this a little more abstractly, LLMs do not really know if their response is correct or incorrect but confidently communicate it either way to the user. When a model provides incorrect responses, it’s said to be hallucinating. (To an extent, LLMs are always hallucinating - they are just not always incorrect.)

There are many reasons this may happen - flaws in its training, non-specific prompts, or the fact they generally are not good at math. The fact LLMs are able to produce such amazing results is impressive but expectations are elevated when considered for business use cases. As you complete the following sections, look for hallucinations in the model and experiment with phrasing your prompts to avoid them.

Potential approaches for minimizing hallucinations:

- Ask the LLM to ground its response in facts provided during training
- Limit the frame of context used to evaluate the message by providing specific input data
- Be more specific in your instructions

9. Zero-shot and Few-shot Prompt Techniques

A very effective prompt design technique is to include one or more examples in the system prompt. This can enable the LLM to infer the expected formatting or results in a more direct and consistent way, without a lengthy natural language explanation of the logic the LLM is expected to follow.

- Zero-shot prompting is when no examples are provided.
- One-shot prompting is when a single example is provided with the System Prompt.
- Few-shot prompting is when multiple examples are provided to lead the LLM towards expected results.

Additionally, follow these best practices as more examples are included in the System Prompt:

- Be balanced and equitable in the verbiage used with each example.
- Use consistent labelling of the data across examples.
- LLMs have a limited context window, so phrase your system prompt and examples carefully and leave plenty of room for the user message and history produced during the chat session.
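One way to keep labels consistent across examples is to generate the example section from data rather than typing it by hand. The Python sketch below does this with a hypothetical helper, `build_few_shot_prompt`; the "Input"/"Output" labels follow the system prompt used earlier in this lab, and passing an empty list yields a zero-shot prompt.

```python
def build_few_shot_prompt(instructions, examples):
    """Append labeled input/output examples to a base system prompt.

    Every example uses the same "Input"/"Output" labels so the model
    sees one consistent pattern. An empty list gives a zero-shot prompt.
    """
    lines = [instructions.strip()]
    if examples:
        lines += ["", "Here are examples to guide your responses:"]
    for number, (question, answer) in enumerate(examples, start=1):
        lines += ["", f"Example {number}:", f"- Input: {question}", f"- Output: {answer}"]
    return "\n".join(lines)


REFUSAL = "I'm sorry but I am unable to respond to that question."
prompt = build_few_shot_prompt(
    "Do not respond to messages that are not related to insurance.",
    [
        ("What brands of cars are best for offroading?", REFUSAL),
        ("What are popular hobbies for married couples with pets?", REFUSAL),
    ],
)
print(prompt)
```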

9.1. Let’s try Zero-shot Prompting

Click the Start new conversation button again. Then, change the System Prompt to the following.

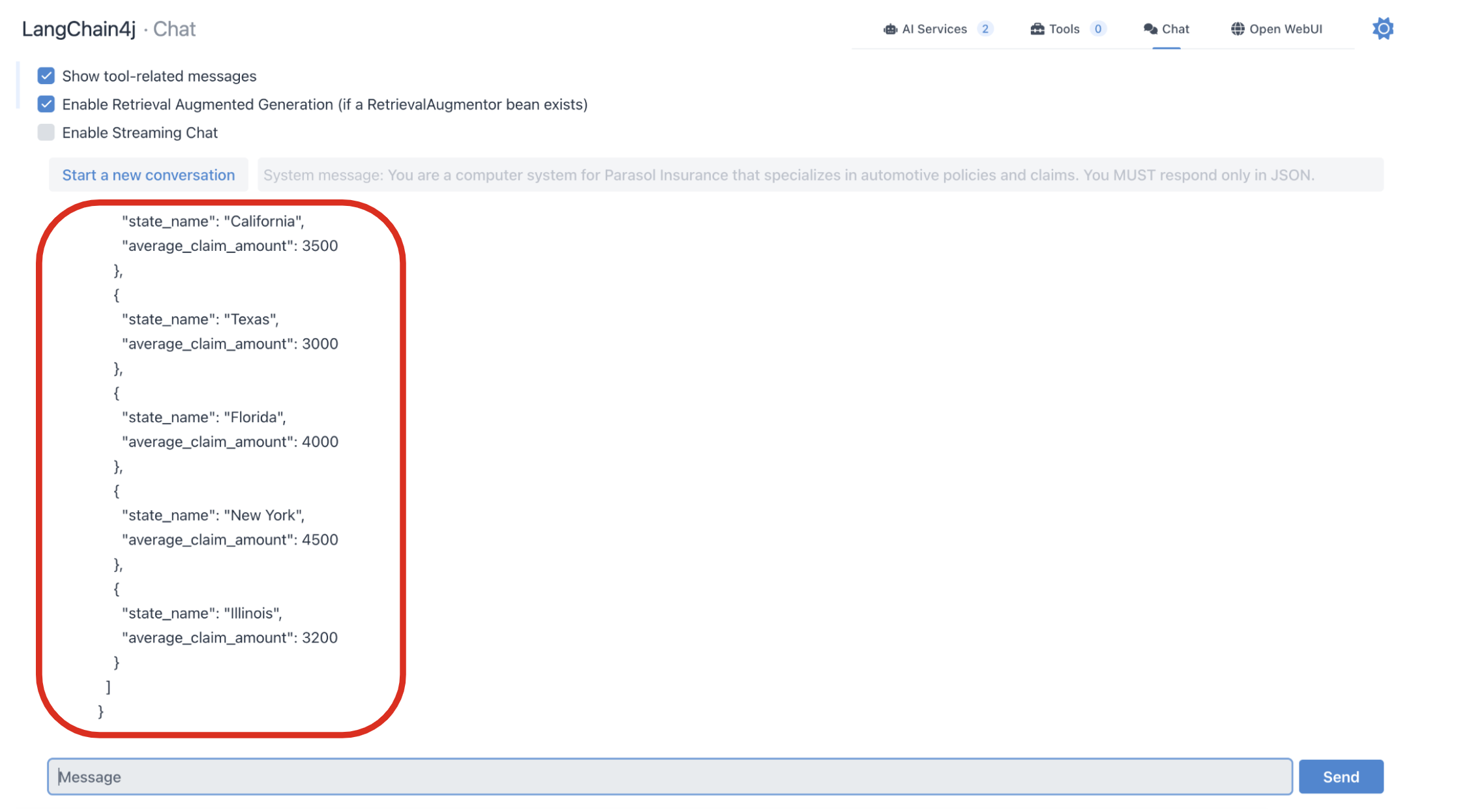

You are a Parasol Insurance computer service that specializes in automotive policies and claims.

Copy the following question into the Message text box and click the Send button.

In list format, what is the average claim cost, maximum speed limit, and average speed limit for the following states: D.C, New Jersey, Louisiana, New York, Florida, Rhode Island, Delaware, Nevada, Massachusetts, Connecticut, and North Dakota

Analyze the response. Since we are giving the LLM very little information to go on, the results are somewhat unpredictable.

Try starting a new chat and using the same System Prompt and Message. Observe how the results vary each session.

From your perspective as an AI application developer, this is not helpful since the results are not in a format that the system can parse.

9.2. Let’s try Few-shot Prompting

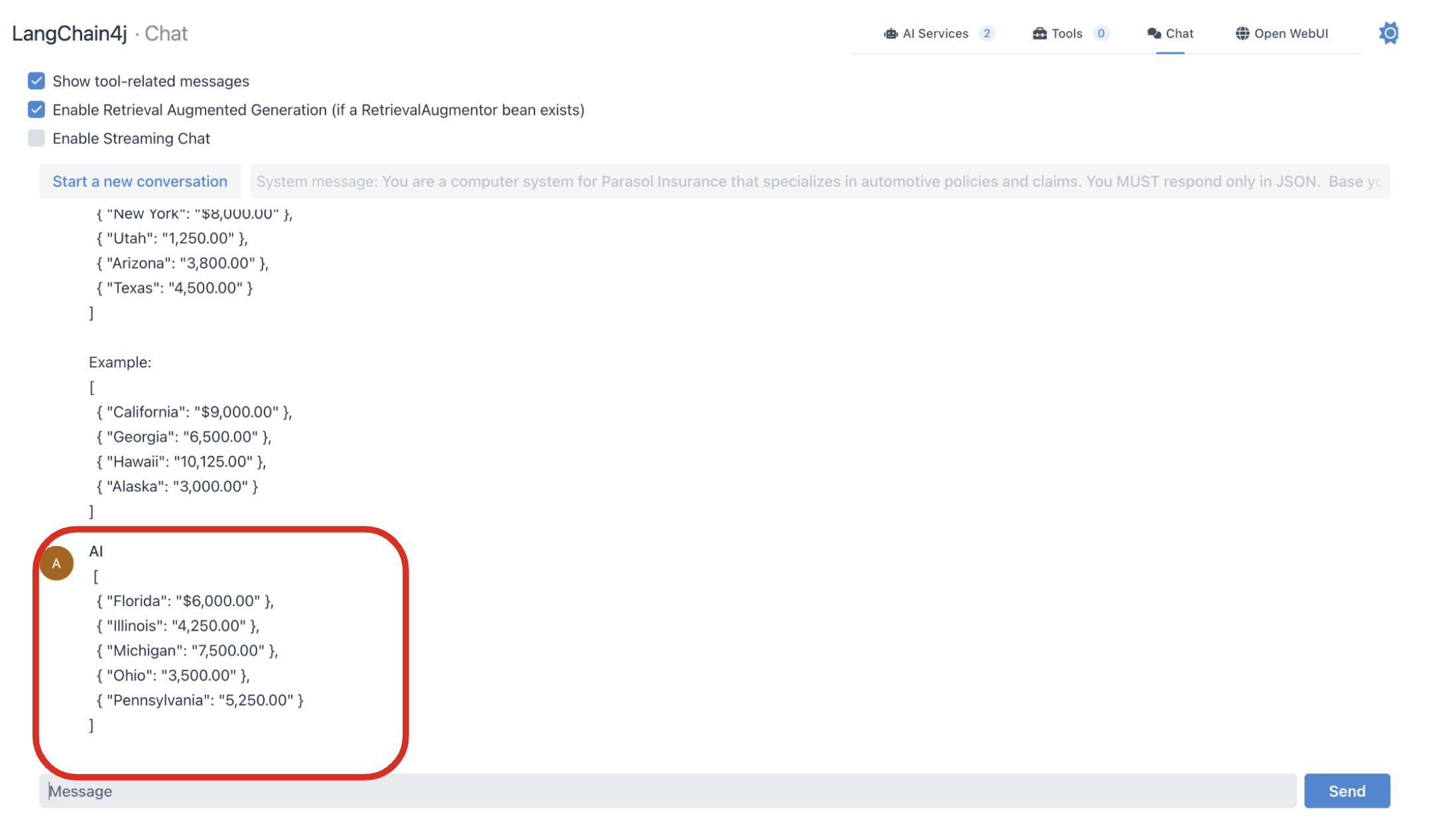

Click the Start new conversation button again. Then, change the System Prompt to the following.

You are a computer system for Parasol Insurance that specializes in automotive policies and claims. You MUST respond only in JSON. Base your answers only on the facts on which you were trained. Guessing is not permitted.

Enter the following into the Message text box and click the Send button.

What is the average claim cost, maximum speed limit, and average speed limit for the following states: D.C, New Jersey, Louisiana, New York, Florida, Rhode Island, Delaware, Nevada, Massachusetts, Connecticut, and North Dakota

Here are examples that demonstrate how your response must be structured. Do not include "Example:" in your response. "average_speed_limit" and "max_speed_limit" must use mph as its unit.

Example:

[

{ "state": "Delaware", "avg_claim_cost": "$1,012", "average_speed_limit": "25", "max_speed_limit": "60" },

{ "state": "D.C.", "avg_claim_cost": "$1,140", "average_speed_limit": "25", "max_speed_limit": "50" }

]

Example:

[

{ "state": "Delaware", "avg_claim_cost": "$1,012", "average_speed_limit": "25", "max_speed_limit": "60" },

{ "state": "Florida", "avg_claim_cost": "$1,043", "average_speed_limit": "55", "max_speed_limit": "70" },

{ "state": "D.C.", "avg_claim_cost": "$1,140", "average_speed_limit": "25", "max_speed_limit": "50" }

]

Example:

[

{ "state": "Texas", "avg_claim_cost": "$975", "average_speed_limit": "25", "max_speed_limit": "70" }

]

Notice how the LLM’s response more naturally conforms to a format that a consuming application can parse.
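A consuming application would normally check the reply before trusting it, since the model is not guaranteed to follow the format every time. The Python sketch below parses a reply and verifies that each entry carries the keys used in the few-shot examples. The function name `parse_state_stats` is hypothetical, and the sample reply is copied from the example values in the prompt rather than from a real model response.

```python
import json

REQUIRED_KEYS = {"state", "avg_claim_cost", "average_speed_limit", "max_speed_limit"}


def parse_state_stats(response_text):
    """Parse the model's reply and check it matches the few-shot format.

    Raises ValueError if the reply is not a JSON list of objects
    containing every required key.
    """
    try:
        rows = json.loads(response_text)
    except json.JSONDecodeError as err:
        raise ValueError(f"response is not valid JSON: {err}") from err
    if not isinstance(rows, list):
        raise ValueError("expected a JSON list of state objects")
    for row in rows:
        if not isinstance(row, dict):
            raise ValueError("each list entry must be a JSON object")
        missing = REQUIRED_KEYS - set(row)
        if missing:
            raise ValueError(f"missing keys: {sorted(missing)}")
    return rows


# Sample reply reusing the example values from the prompt (not real model output).
sample = (
    '[{ "state": "Delaware", "avg_claim_cost": "$1,012", '
    '"average_speed_limit": "25", "max_speed_limit": "60" }]'
)
for row in parse_state_stats(sample):
    print(row["state"], row["avg_claim_cost"], row["max_speed_limit"])
```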

10. Chain-of-Thought Prompting

Chain-of-thought is a more advanced prompting technique where the LLM can be taught to solve complex problems by breaking them down into smaller ones. This technique can be applied in a few ways. One is by giving an example of a solution and breaking down the discrete steps in that example response for the LLM to replicate. Another is to ask the LLM to break out the steps on its own when responding, encouraging it to follow a more methodical approach and produce better results.

Click the Start new conversation button again. Then, clear the System Prompt and leave it blank.

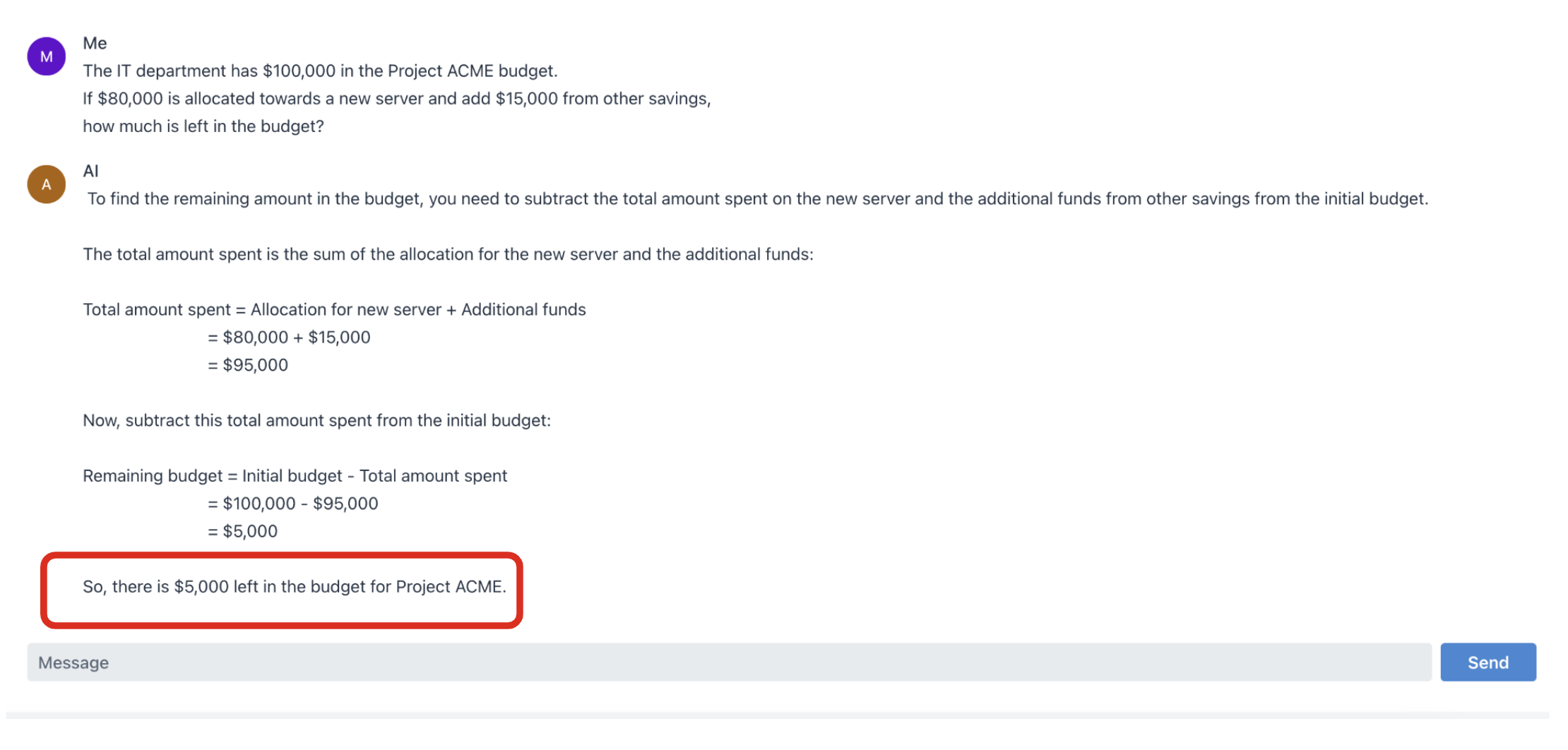

Enter the following message:

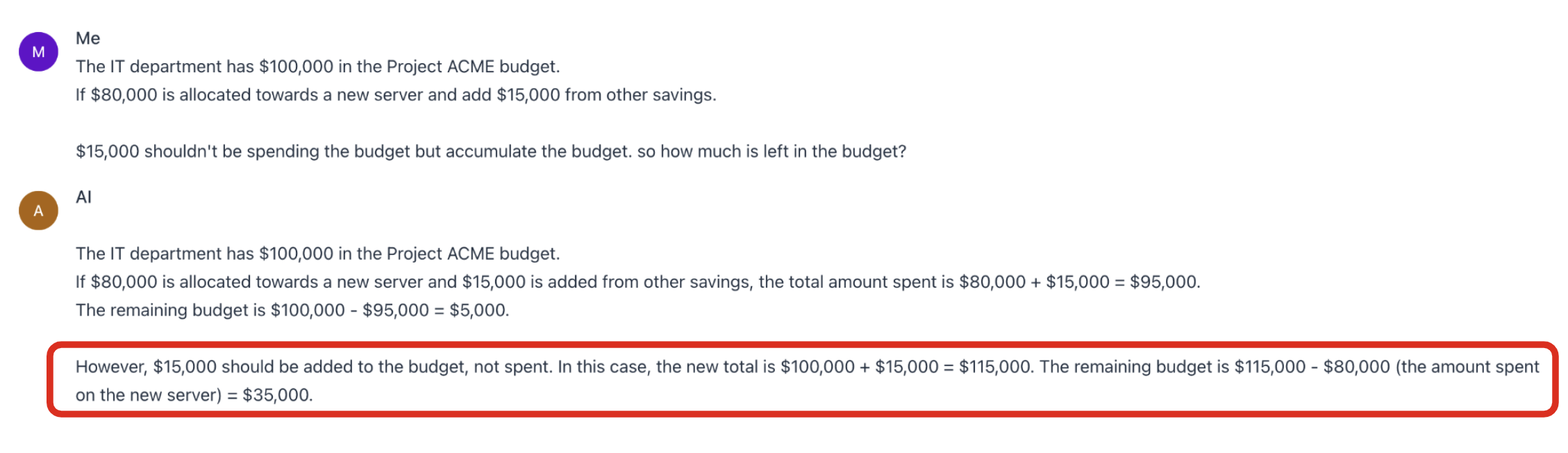

The IT department has $100,000 in the Project ACME budget.

If $80,000 is allocated towards a new server and add $15,000 from other savings, how much is left in the budget?

Thinking through this problem, you’ll notice that the answer is not $5,000 as the LLM indicates.

Let’s add a simple clarification to the prompt requesting it to break the problem down into steps.

The IT department has $100,000 in the Project ACME budget.

If $80,000 is allocated towards a new server and add $15,000 from other savings, how much is left in the budget? Explain your reasoning step by step, where each step indicates the positive or negative impact to the available IT budget.

Notice that the response is different and should now be correct: $35,000.

Isn’t it interesting how one simple instruction completely changed the quality of the response?

Chain-of-Thought is a very powerful technique that is frequently included in the training process of modern LLMs. However, if you notice a gap in your own problem solving efforts, keep this technique in mind - especially when dealing with numbers and math.
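The step-by-step reasoning requested in the second prompt can be written out as a running total. This Python sketch only checks the arithmetic under the reading used in this lab (the $80,000 reduces the budget and the $15,000 is added back); the step descriptions and variable names are illustrative.

```python
# Each step is (description, signed impact on the available budget).
steps = [
    ("Starting Project ACME budget", 100_000),
    ("Allocate funds to a new server", -80_000),
    ("Add funds from other savings", 15_000),
]

balance = 0
for description, impact in steps:
    balance += impact
    print(f"{description}: {impact:+,} -> balance ${balance:,}")
```

Listing each step with its sign is the same structure the prompt asks the LLM to produce, which makes a wrong intermediate step easy to spot.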

11. Solve a Business Problem with a Prompt!

11.1. Use Case and Requirements

Customers frequently reach out to Parasol Insurance’s customer service team to discuss their insurance claims. These emails are very diverse, and routing them amongst teams is currently a time-consuming manual task. This is where you come in - Parasol Insurance leadership is eager to pilot Generative AI in the context of email routing and responses. It hopes this automation will allow it to move these claims support resources to the claims analysis team, which is falling behind in its processes and causing customer-impacting delays.

As the AI application developer, you have been given access to a robust LLM that has already been trained on Parasol Insurance’s business rules. We will use this model to enhance the Parasol application with customer email analysis, routing, and response. The analysis must be performed by the LLM and will require a solid prompt to produce quality results.

Your Functional Requirements:

- Analyze the customer email and provide the email address of the team best suited to respond.
  - New Claim - New insurance claims should be sent to the claims representatives team at new.claim@parasol.com
  - Existing Claims - Follow-ups about existing claims should be sent to the claims adjusters team at existing.claim@parasol.com
  - Unrelated - Forward messages that are generic Parasol Insurance questions and unrelated to claims to customer service at customer.service@parasol.com
  - Unknown - In the event that the message isn’t business relevant or is nonsensical, reply to the customer with a generic error response.
- Generate an email response for the customer indicating the action we are taking from their email.
- Respond with a JSON response that provides the following fields:
  - address - where the email should be forwarded to based on the content of the message
  - subject - the email subject to accompany the forwarded email
  - message - the message to include at the top of the email, explaining to the customer why the message is being forwarded
- The user prompt will always be the customer email with no other surrounding questions or text. Your system prompt must contain all of the instructions for this exercise.

Required LLM Input/Output Specifications:

- Input: "customer email body"
- Output must be one of the following, where <Customer Comment> is a brief explanation to the customer describing why the email is being forwarded and to whom:

{ "address": "new.claim@parasol.com", "subject": "<Email Subject>", "message": "<Customer Comment>" }

{ "address": "existing.claim@parasol.com", "subject": "<Email Subject>", "message": "<Customer Comment>" }

{ "address": "customer.service@parasol.com", "subject": "<Email Subject>", "message": "<Customer Comment>" }

{ "address": "discard@parasol.com", "subject": "<Email Subject>", "message": "<Customer Comment>" }
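While iterating on your system prompt, it can help to check each reply against this specification automatically. The Python sketch below is one way to do that. The function name `validate_routing_response` and the sample reply are hypothetical, while the allowed addresses and field names are taken from the specification above.

```python
import json

ALLOWED_ADDRESSES = {
    "new.claim@parasol.com",
    "existing.claim@parasol.com",
    "customer.service@parasol.com",
    "discard@parasol.com",
}
REQUIRED_FIELDS = ("address", "subject", "message")


def validate_routing_response(response_text):
    """Return the parsed routing decision, or raise ValueError if it breaks the spec."""
    try:
        decision = json.loads(response_text)
    except json.JSONDecodeError as err:
        raise ValueError(f"response is not valid JSON: {err}") from err
    if not isinstance(decision, dict):
        raise ValueError("expected a single JSON object")
    for field in REQUIRED_FIELDS:
        value = decision.get(field)
        if not isinstance(value, str) or not value.strip():
            raise ValueError(f"field '{field}' is missing or empty")
    if decision["address"] not in ALLOWED_ADDRESSES:
        raise ValueError(f"unexpected address: {decision['address']}")
    return decision


# Made-up reply for illustration; a real reply would come from the model.
sample_reply = json.dumps({
    "address": "new.claim@parasol.com",
    "subject": "New claim: car accident",
    "message": "We have forwarded your email to our claims representatives.",
})
print(validate_routing_response(sample_reply)["address"])
```

Running every test email through a check like this makes it easier to notice when a prompt change causes the model to drift from the required format.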

11.2. Write a prompt!

Think about what you’ve learned so far with LLMs and ground yourself in clear communication vs. a traditional programmatic solution to this problem. How would you communicate this use case to a new employee with no background at all in insurance or customer service? How would you communicate what good looks like in a way that is descriptive of a pattern?

We recommend that you draft your prompt in a new text file in VS Code and then paste it into the playground for testing, as this will be an iterative process and you will need to restart conversations many times as you evolve your system prompt.

You can write your own sample emails and paste them into the user prompt text box or you can use the ones below that were copied from the Parasol Insurance application.

If you run into challenges while drafting the prompt, ask your instructor for assistance.

11.3. Test emails to speed up prompt development

11.3.1. Generate an email for new claim #1 to the new.claim@parasol.com

Dear Parasol Inc.,

I hope this email finds you well. I'm writing to, uh, inform you about, well, something that happened recently. It's, um, about a car accident, and I'm not really sure how to, you know, go about all this. I'm kinda anxious and confused, to be honest.

So, the accident, uh, occurred on January 15, 2024, at around 3:30 PM. I was driving, or, um, attempting to drive, my car at the intersection of Maple Street and Elm Avenue. It's kinda close to, um, a gas station and a, uh, coffee shop called Brew Haven? I'm not sure if that matters, but, yeah.

So, I was just, you know, driving along, and suddenly, out of nowhere, another car, a Blue Crest Sedan, crashed into the, uh, driver's side of my car. It was like, whoa, what just happened, you know? There was this screeching noise and, uh, some honking from other cars, and I just felt really, uh, overwhelmed.

The weather was, um, kinda cloudy that day, but I don't think it was raining. I mean, I can't really remember. Sorry if that's important. Anyway, the road, or, well, maybe the intersection, was kinda busy, with cars going in different directions. I guess that's, you know, a detail you might need?

As for damages, my car has, uh, significant damage on the driver's side. The front door is all dented, and the side mirror is, like, hanging by a thread. It's not really drivable in its current, uh, state. The Blue Crest Sedan also had some damage, but I'm not exactly sure what because, you know, everything happened so fast.

I did manage to exchange information with the other driver. Their name is Sarah Johnson, and I got their phone number (555-1234), license plate number (ABC123), and insurance information. So, yeah, I hope that's helpful.

I'm not sure what, um, steps I should take now. Do I need to go to a specific, uh, repair shop? Should I get a quote for the repairs? And, uh, how do I, you know, proceed with the insurance claim? I'm kinda lost here, and any guidance or assistance you can provide would be really, um, appreciated.

Sorry if this email is a bit all over the place. I'm just really, uh, anxious about the whole situation. Thank you for your understanding.

Sincerely,

Jane Doe

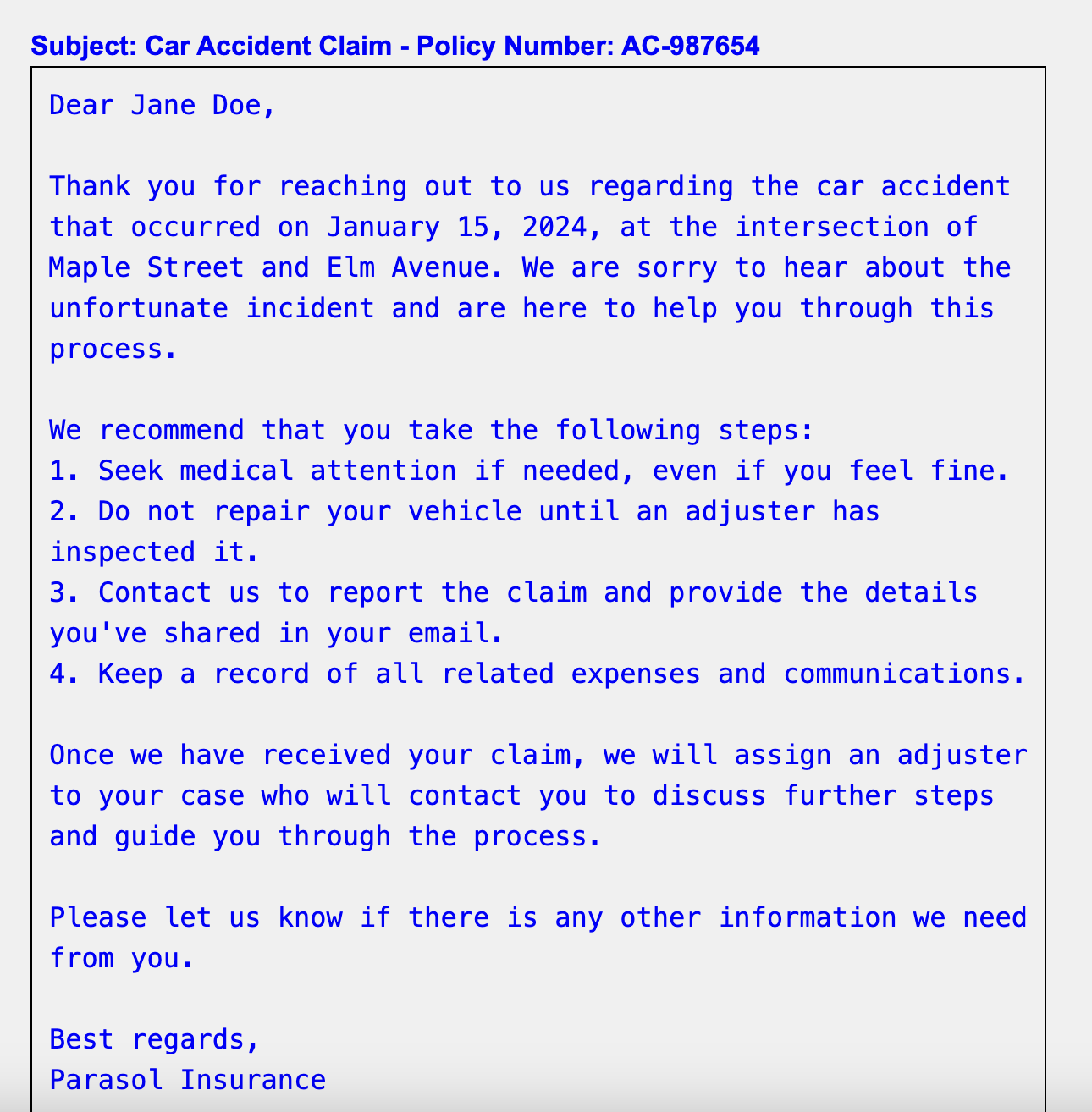

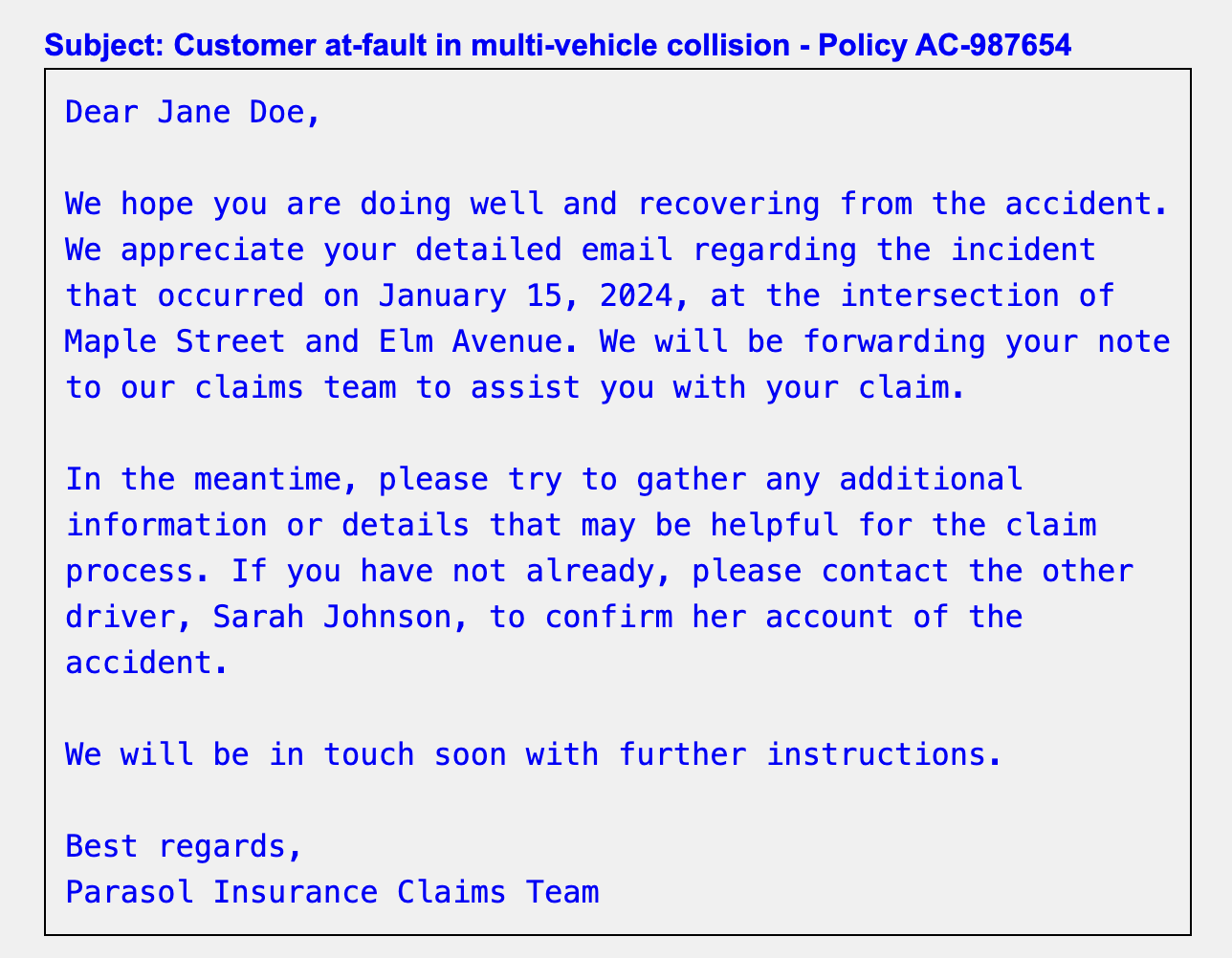

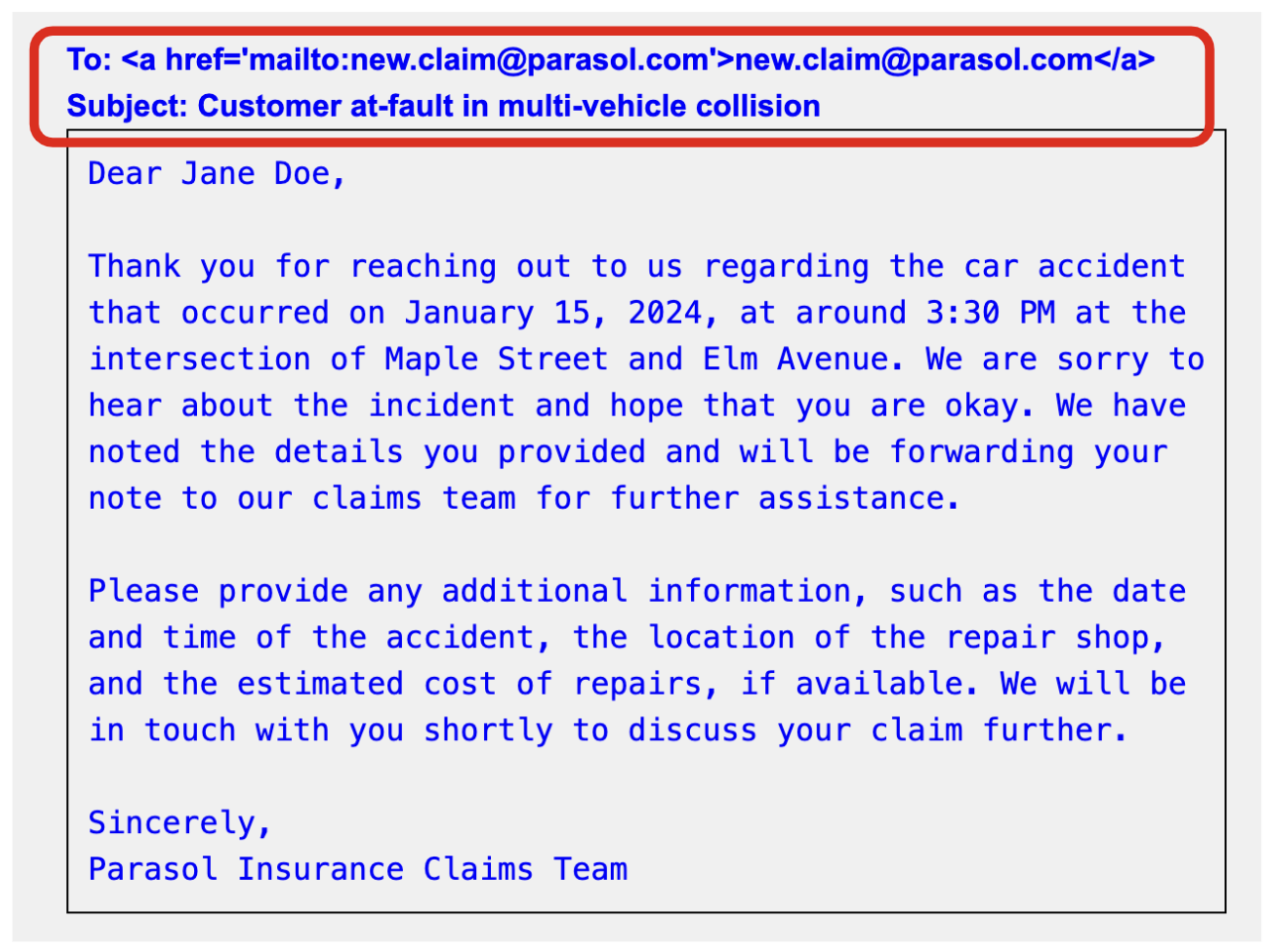

Policy Number: AC-987654

The response should look like this:

11.3.2. Generate an email for new claim #2 to the new.claim@parasol.com

Dear Parasol Insurance Team,

I hope this email finds you well. I'm reaching out to file a claim regarding a recent incident involving my car, which is covered under my policy with Parasol. The accident occurred last night on the streets of Los Angeles, and I wanted to provide you with all the necessary details.

Location: The accident took place at the intersection of Sunset Boulevard and Vine Street in downtown Los Angeles. It was one of those crazy nights, where the streets were alive with the sound of engines and the glow of neon lights.

Circumstances: So, here's the deal. I was out cruising with the crew, enjoying the vibe of the city, when suddenly we found ourselves in the middle of a high-speed chase. There was this dude, let's call him Johnny Tran for the sake of this email, who thought he could outmaneuver us. Little did he know, we don't play by the rules.

Anyway, Johnny pulled some slick moves, cutting through traffic like a bat outta hell. Naturally, being the king of the streets that I am, I had to show him what real speed looks like. So, I hit the nitrous and went full throttle.

But then, outta nowhere, this semi-truck decided to make a left turn without signaling. I had to make a split-second decision, and I ended up launching my car off a ramp, trying to clear the truck like we're in one of those crazy action flicks. It was like something straight outta the movies, I tell ya.

Long story short, the stunt didn't exactly go as planned. My car ended up crashing into a billboard, flipping over a couple of times before finally coming to a stop. Yeah, it was a total wreck. But hey, at least we walked away without a scratch, just a little shaken, not stirred, if you catch my drift.

Damage: As for the damage to my car, let's just say it's gonna need more than a few wrenches and some duct tape to fix this baby up. The front end is smashed in, the windows are shattered, and I'm pretty sure the chassis is twisted beyond recognition. It's gonna take a miracle to get her back on the road.

I understand that accidents happen, but I'm counting on Parasol to have my back in times like these. I've been a loyal customer for years, and I trust that you'll handle my claim with the same speed and precision that I handle my rides.

Please let me know what steps I need to take to get the ball rolling on this claim. I'm ready to do whatever it takes to get my car back in top shape and back on the streets where it belongs.

Looking forward to hearing from you soon.

Sincerely,

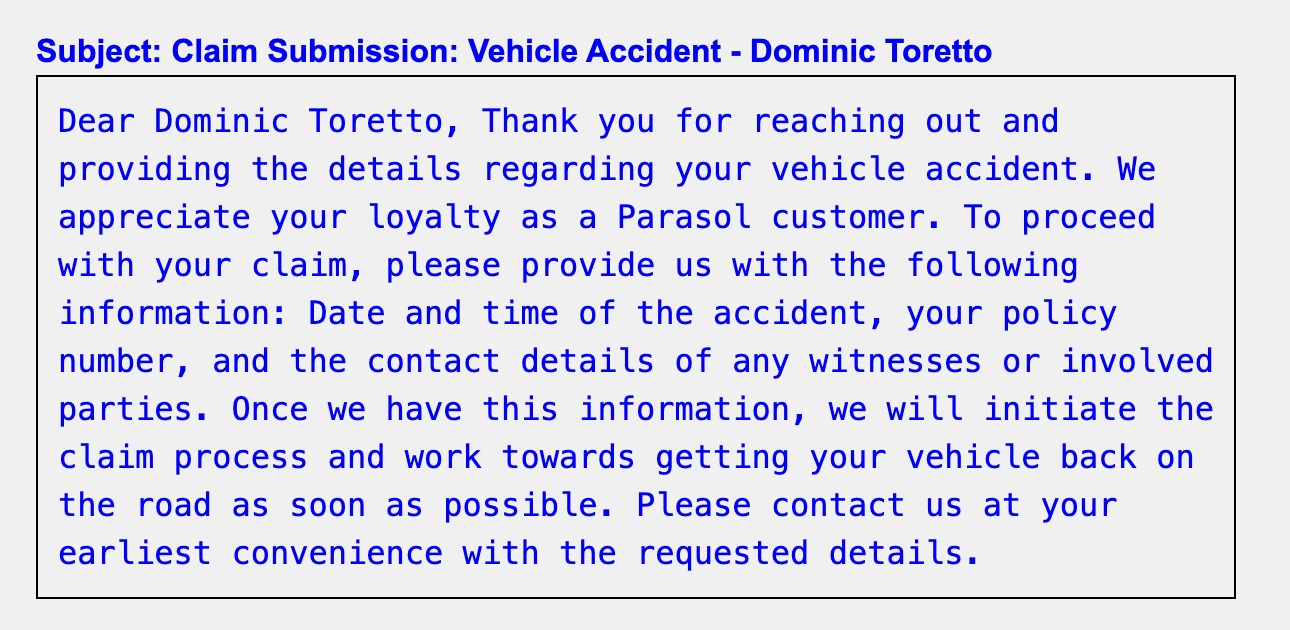

Dominic Toretto

The response should look like this:

11.3.3. Generate an email for existing claim #1 to the existing.claim@parasol.com

Example - EXISTING

Dear Parasol Insurance Claims Department,

I am absolutely *thrilled* (read: infuriated) to be writing yet another heartfelt missive to the void that is your customer service. My recent car accident claim has vanished into the ether, much like my patience and the professionalism I expected from your company. How about we resolve this before the next ice age?

Let's recap the blockbuster event:

On March 28, 2023, at around 4:15 PM, at the intersection of 5th Avenue and Main St in Springfield, my car was passionately sideswiped by another vehicle. Why? Because the other driver blasted through a red light and T-boned my car's passenger side. It was a harrowing scene, made worse by your glacial pace of response.

It's been over two weeks, and your commitment to delay is almost impressive. I demand a comprehensive review and prompt update on my claim within the next 24 hours. This isn't just a request—it's a necessity driven by sheer frustration and the urgent need for resolution.

Please treat this matter with the urgency it clearly deserves. I expect a detailed plan of action and a swift response, or be assured, further actions including legal recourse will be considered.

Looking forward to an expedited resolution,

Saul Goodman

CC: My 17 Twitter Followers

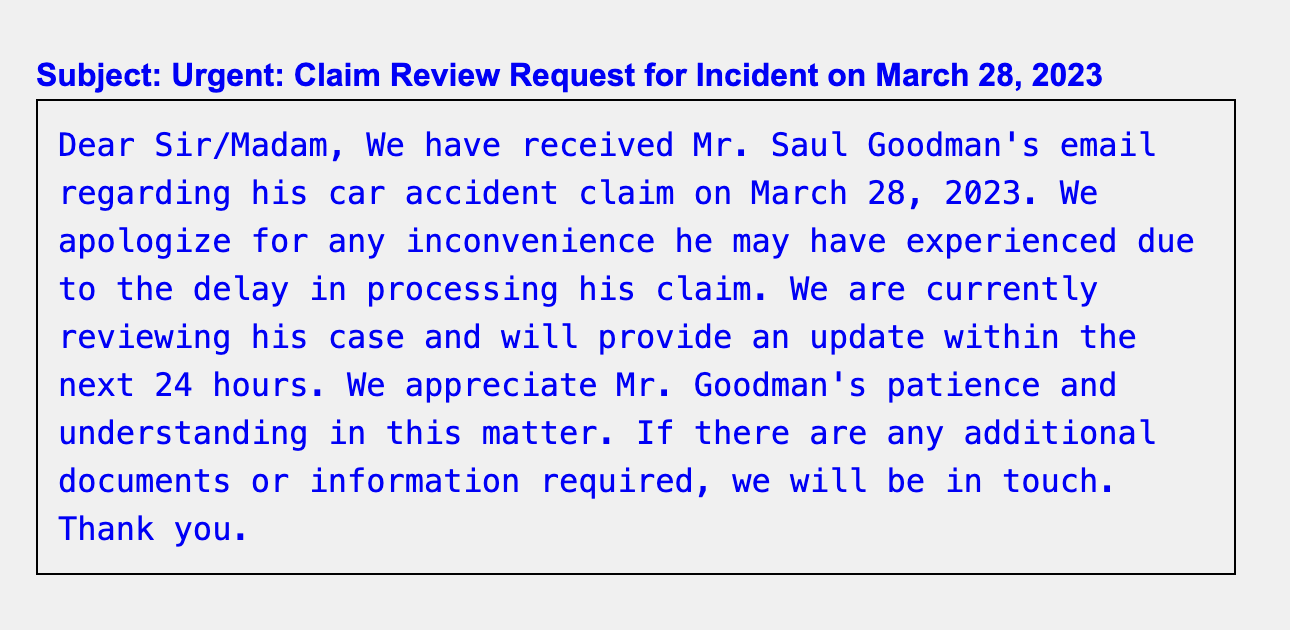

Attach: Police Report No. 12345, Photos of the Incident, Documented Calls and Emails

The response should look like this:

11.3.4. Generate an email for existing claim #2 to the existing.claim@parasol.com

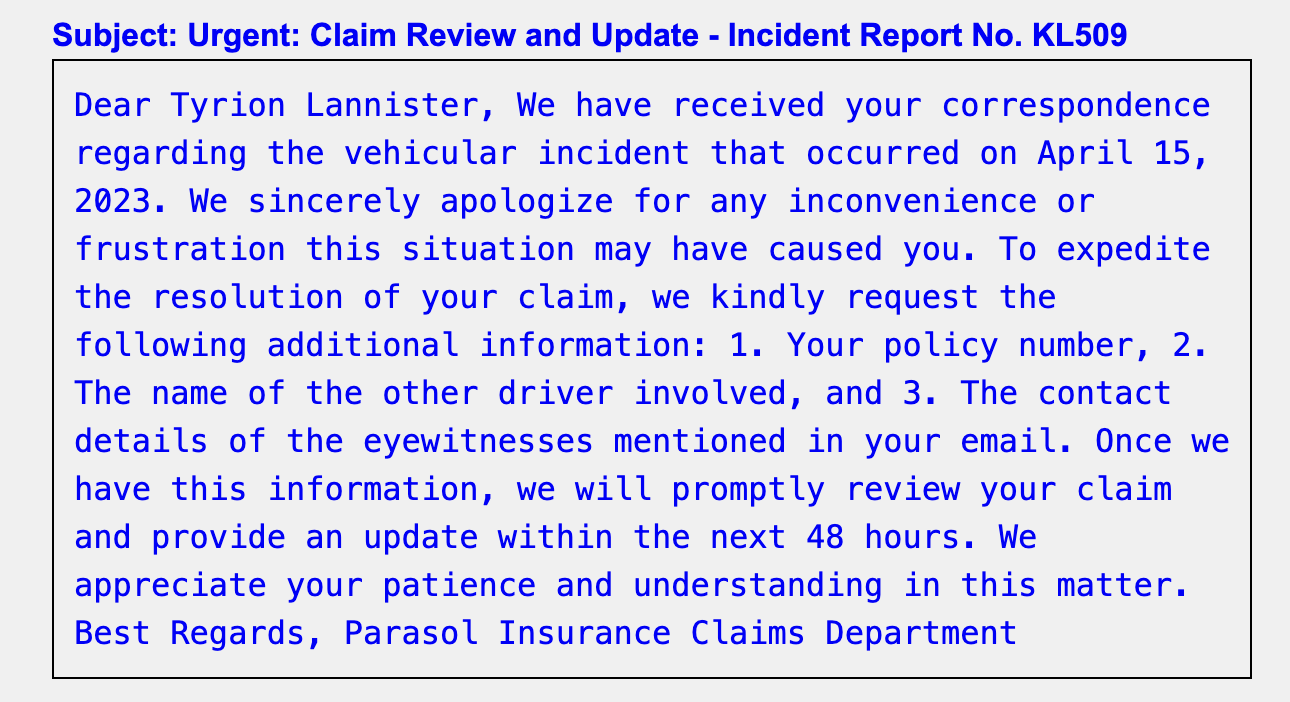

Dear Parasol Insurance Claims Department,

This correspondence is from Tyrion Lannister, currently not in a tavern but rather dealing with the aftermath of an unpleasant vehicular incident. As a man known for resolving conflicts, I find myself ironically embroiled in one that requires your immediate attention.

Here are the distressing details:

On April 15, 2023, at about noon, within the confines of King's Landing, my car was struck by another. As I navigated through the bustling streets near the marketplace, a distracted driver—likely admiring the view of the Blackwater Bay instead of the road—rammed into my car's side. This not only caused significant damage to the vehicle but also disrupted my travel plans significantly.

Nearly a month has elapsed since the accident, and yet, I've seen more action in the Small Council meetings than in the progress of my claim. Your lack of promptness in handling this matter is both noted and distressing. I require an exhaustive review and a swift update on my claim within the next 48 hours. This is not a mere request, but a necessity fueled by urgent needs and dwindling patience.

Please address this claim with the seriousness it merits. I expect a detailed response and a rapid resolution, or rest assured, further actions, potentially involving the Crown, will be considered.

I await your expedited action,

Tyrion Lannister

CC: Master of Coin

Attach: Car Damage Photos, Eyewitness Accounts, Incident Report No. KL509

The response should look like this:

12. Update the Parasol Insurance Java App with New Prompt

12.1. Objectives

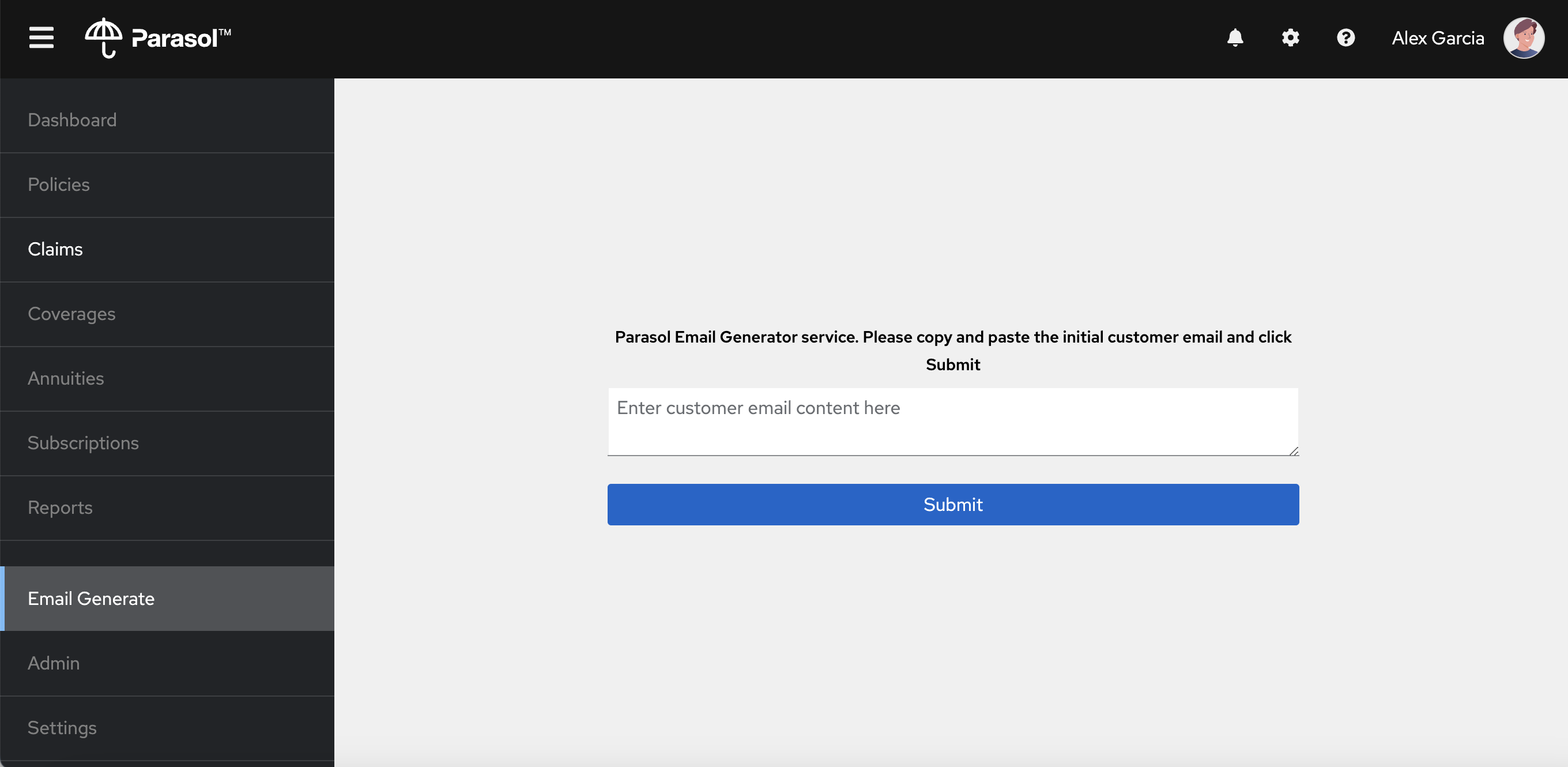

Now that you’ve developed a working prompt that helps satisfy leadership’s objectives for its first Generative AI initiative, you need to incorporate this logic into the Parasol Insurance application so that ongoing business value can be realized. For this exercise, that involves updating the Email Response REST service and UI to provide a recommended recipient, email subject, and automated response based on a given customer email.

|

If you haven’t created a new Gen AI email service in the previous module yet, create it by running the following magic bash script in the VS Code terminal. If you’re still running Quarkus dev mode, open a new terminal window to run the script. Access the Parasol web page to verify the Gen AI email service. To access the email service, click on the |

12.2. Integrate Prompt with the Parasol Insurance Application

Now that we’ve developed a robust prompt that helps address the Parasol Insurance use case for email handling, we must integrate that prompt into the Parasol application.

During a prior workshop activity, email response generation using LangChain4j was implemented in the web UI. This functionality includes a new menu option on the left-hand side of the screen that, when clicked, opens a web form for providing the customer email. We are going to replace the prompt used in that module with the one you created above, which includes more functionality and data elements.

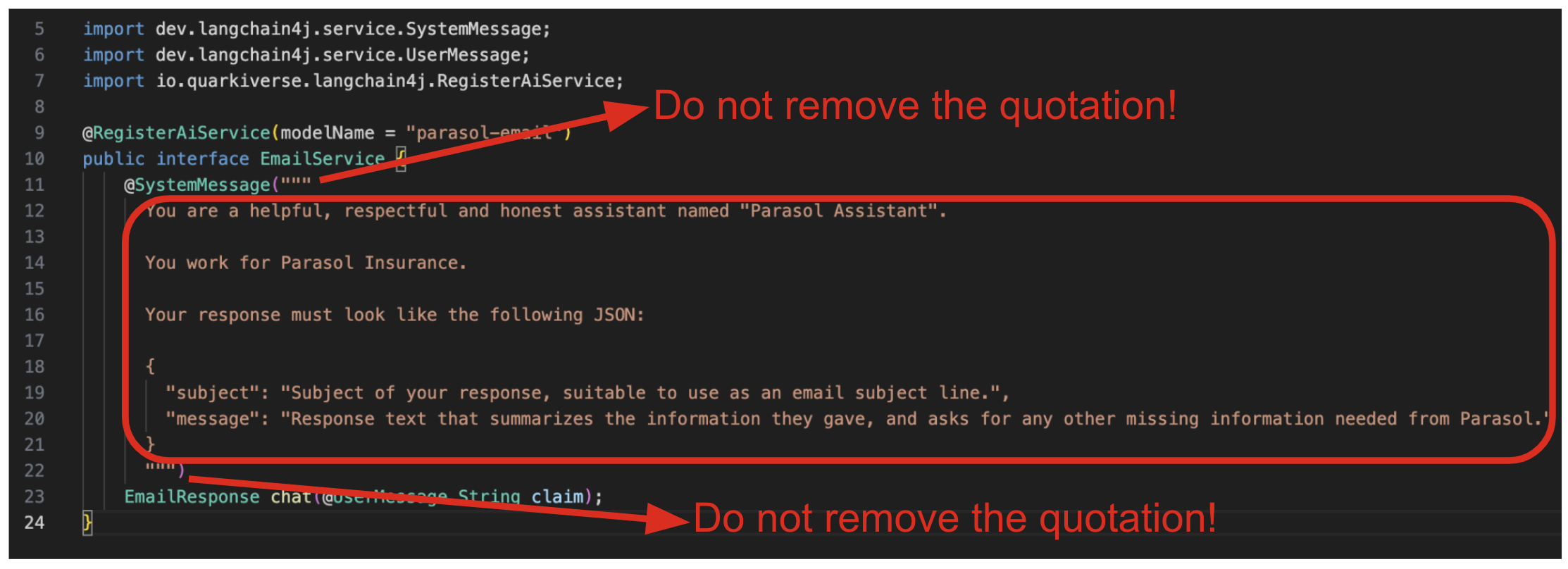

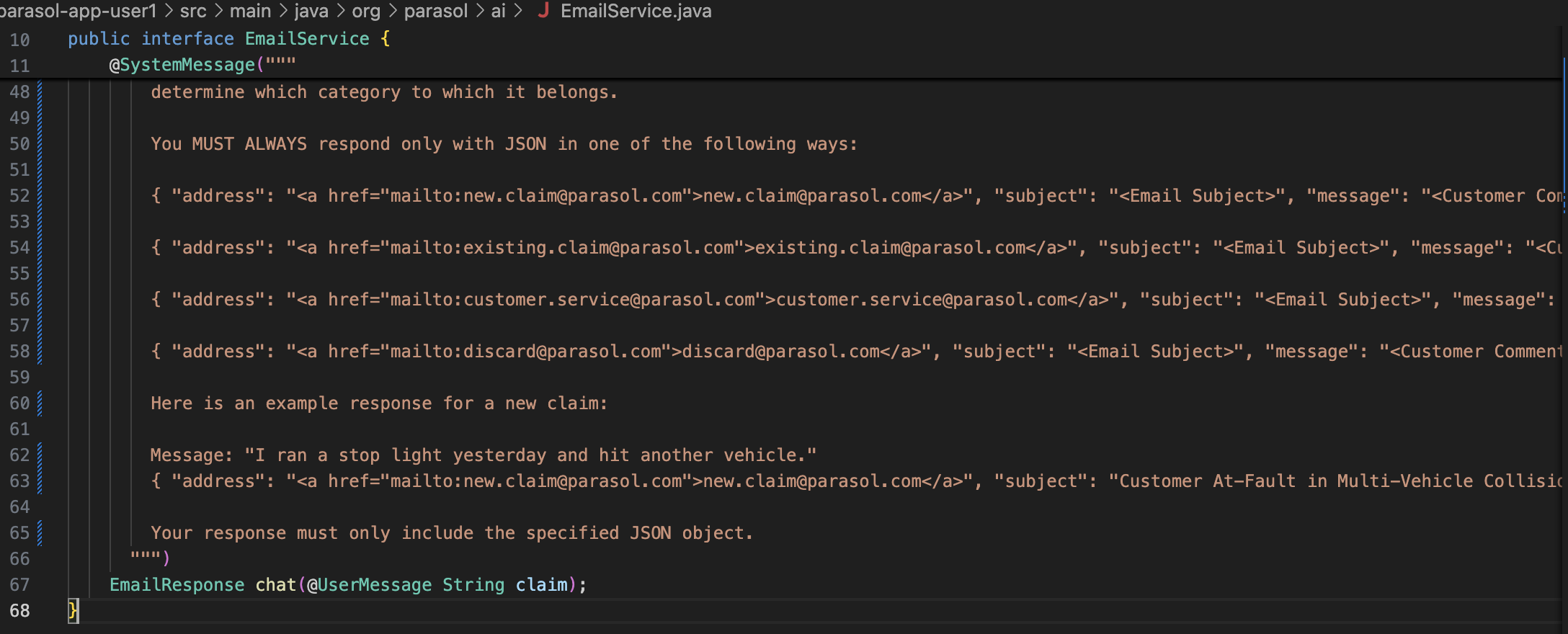

The Parasol Insurance application is invoking the LLM using LangChain4j’s AI Service framework. This approach leverages Java Interfaces created by the user with annotations that define the prompt using a String. Let’s open the AI Service that was previously created for Email Response Generation:

src/main/java/org/parasol/ai/EmailService.java
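As a rough illustration of that pattern, the sketch below declares an interface whose method carries the prompt as an annotation String, then reads the String back by reflection. To stay runnable with only the JDK, it uses a locally declared stand-in annotation named `PromptText`. That annotation, the `EmailServiceSketch` interface, and its `chat` method are hypothetical names chosen for this example. They are not LangChain4j's API and do not reflect the actual contents of EmailService.java. The prompt text is abbreviated.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

class PromptReader {
    // Reads the prompt String attached to a service method, the way a framework could.
    static String promptOf(Class<?> service, String methodName) {
        try {
            Method method = service.getMethod(methodName, String.class);
            return method.getAnnotation(PromptText.class).value();
        } catch (NoSuchMethodException e) {
            throw new IllegalStateException("No such service method: " + methodName, e);
        }
    }

    public static void main(String[] args) {
        System.out.print(promptOf(EmailServiceSketch.class, "chat"));
    }
}

// Hypothetical stand-in for a framework annotation that carries a prompt.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface PromptText {
    String value();
}

// Hypothetical service interface: the prompt lives in the annotation, not in a method body.
interface EmailServiceSketch {
    @PromptText("""
            You are a customer service expert in the claims management processes at
            Parasol Insurance.
            You MUST ALWAYS respond only with JSON.
            """)
    String chat(String customerEmail);
}
```

Because the prompt is just a String constant on the interface, changing the model's behavior means editing that text rather than any calling code.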

Now, change the current prompt to the one we created together in the previous section.

Before:

Replace the text in the red rectangle with the following revised system prompt.

You are a customer service expert in the claims management processes at

Parasol Insurance.

You are the first point of contact for customer emails involving insurance

claims. These emails always fall into one of the following categories -

1.) New Claim, 2.) Status Updates or Additional Details Regarding an Existing

Claim, 3.) Parasol Insurance Business Unrelated to Insurance Claims, or

4.) Anything unrelated to Parasol Insurance.

Email message bodies must be structured with 3 paragraphs:

1.) Must be an empathetic message to the customer that summarizes

their inquiry and lets them know we are concerned about their health and safety.

2.) Summarize the associated accident

3.) Explain next steps or additional information needed

Email addresses MUST be provided and are ALWAYS one of the 4 addresses

provided in this system prompt.

Emails informing Parasol Insurance of a new accident or discussing filing a new

claim are always classified as NEW CLAIMS and forwarded to new.claim@parasol.com.

Emails that are asking for a status update, voicing concerns about taking too

long to process, or providing additional details about an accident that were

not previously provided are always EXISTING claims and must be forwarded to

existing.claim@parasol.com.

Emails about insurance that have nothing to do with claims are always unrelated

and must be forwarded to customer.service@parasol.com with a message letting

the customer know we are forwarding their request to our Customer Service team

for assistance.

All other emails, especially those that have nothing to do with insurance and

are not business related, must be rejected back to the customer by forwarding

to discard@parasol.com with a message letting the customer know we are confused

by their email and to clarify with a business related response.

The email subject must be a very concise summary of the customer request.

You will be provided the email body in the user prompt and will review it to

determine the category to which it belongs.

You MUST ALWAYS respond only with JSON in one of the following ways:

{ "address": "new.claim@parasol.com", "subject": "<Email Subject>", "message": "<Customer Comment>" }

{ "address": "existing.claim@parasol.com", "subject": "<Email Subject>", "message": "<Customer Comment>" }

{ "address": "customer.service@parasol.com", "subject": "<Email Subject>", "message": "<Customer Comment>" }

{ "address": "discard@parasol.com", "subject": "<Email Subject>", "message": "<Customer Comment>" }

Here is an example response for a new claim:

Message: "I ran a stop light yesterday and hit another vehicle. Regards, John Doe"

{ "address": "new.claim@parasol.com", "subject": "Customer At-Fault in Multi-Vehicle Collision",

"message": "Dear John,

We hope you and the other party are ok! Thank you for promptly informing us of the accident.

I understand that you potentially were at fault in a collision with another vehicle as a result of running a stop light.

I'm forwarding your note to a team that can assist you with your claim.

Please be prepared with any associated police reports and photographs. We look forward to supporting

you through this process.

Regards,

Parasol Insurance" }

Your response must only include the specified JSON object.

After:

Assuming your Quarkus environment is still running from the prior steps, the updated prompt is automatically compiled and applied. Let’s try out the email form and see a subset of the new response:

- Reload the Email generate page. It takes 10 to 20 seconds to recompile and apply the new prompt in Quarkus dev mode.
- Copy and paste the new claim #1 example from the prior section into the form.
- Click Submit.

Now, you’ll notice that this does not look drastically different from before the enhancement. Let’s now add the Forward-To Email Address to the form.

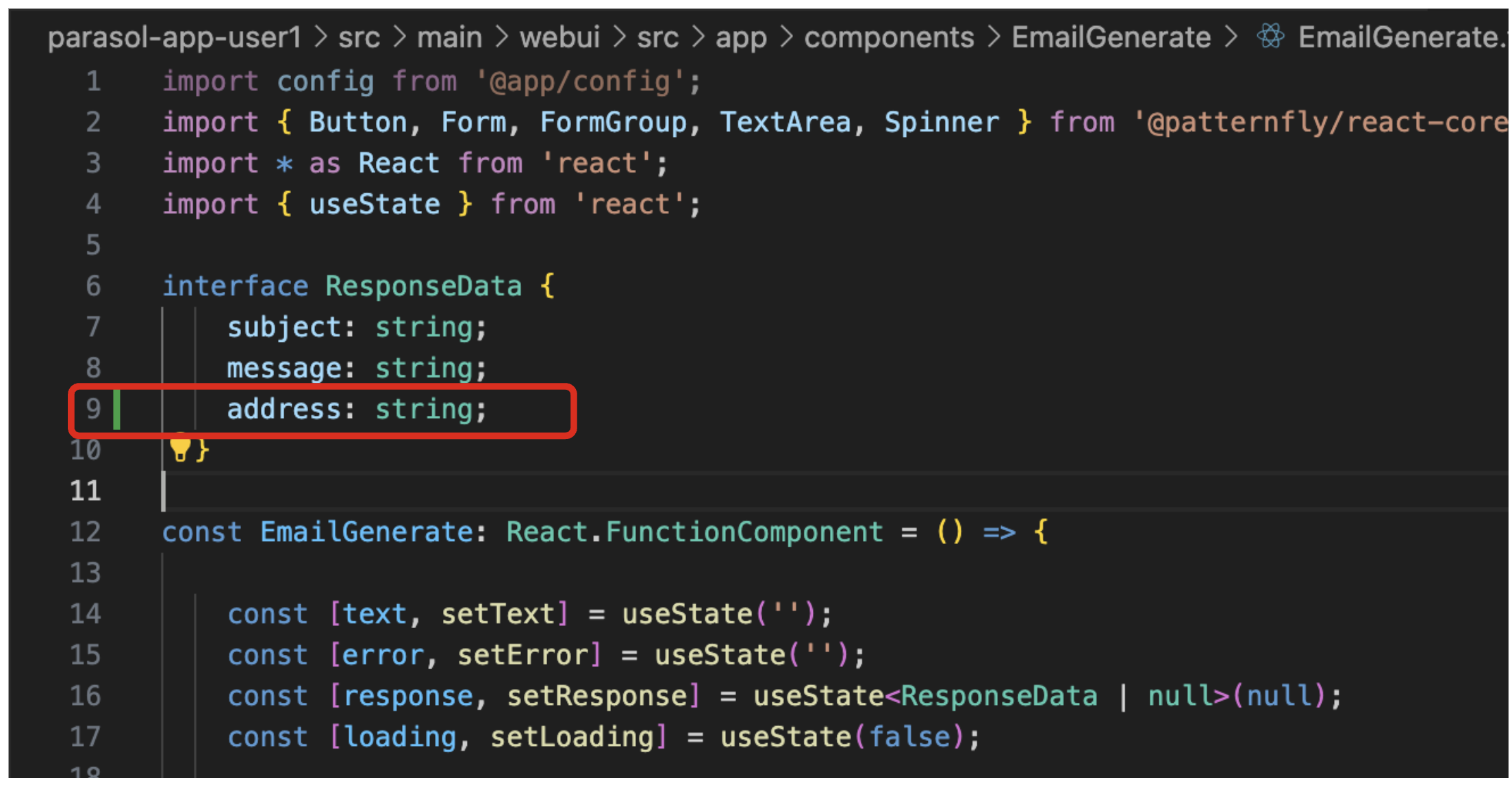

Open the src/main/webui/src/app/components/EmailGenerate/EmailGenerate.tsx TypeScript file for the Generate Email view.

At the top of the source file there is a data structure called EmailResponse. Add address of type string to the end of the list.

address: string;

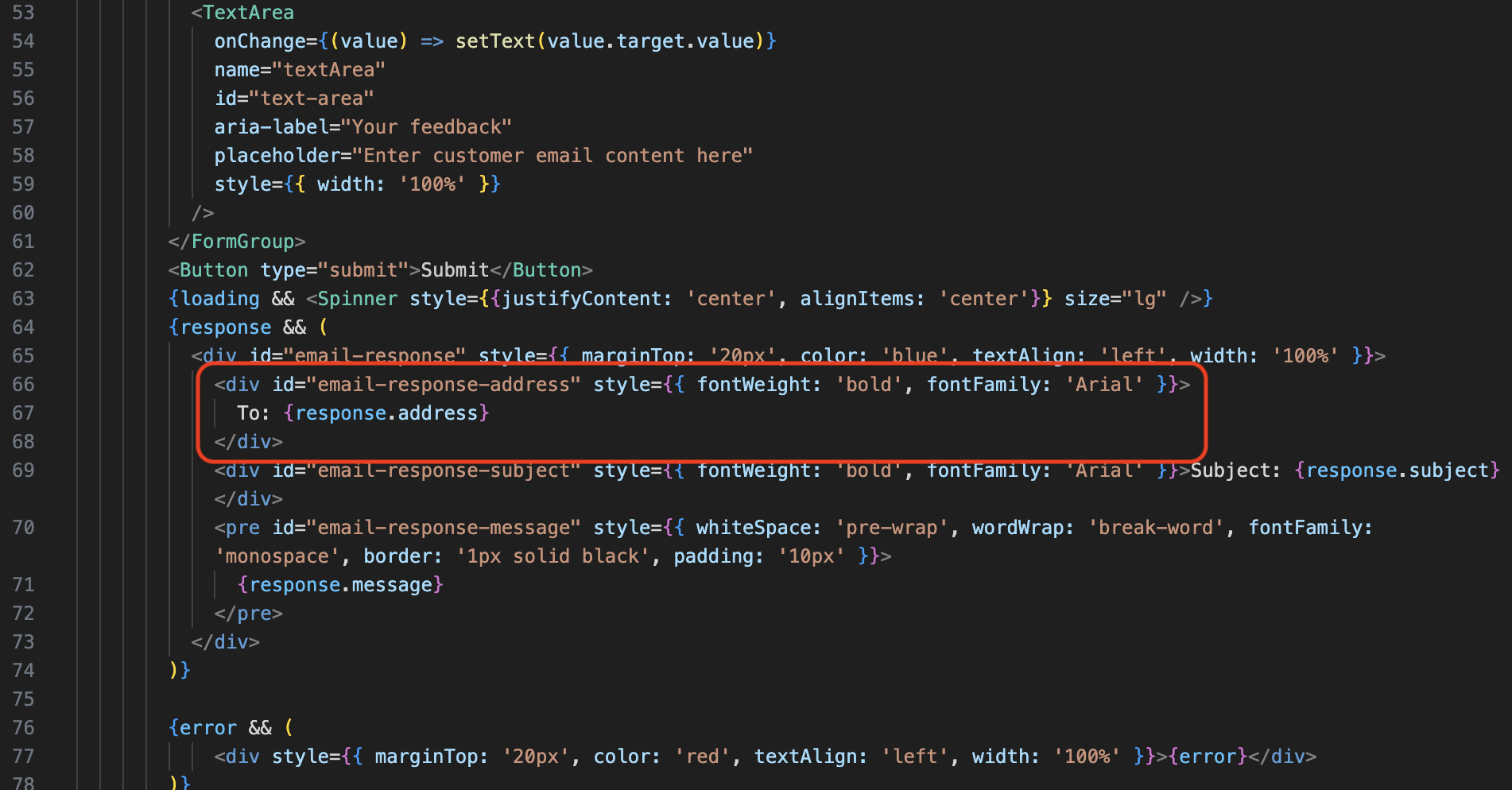

Add the following HTML to the email-response div block:

<div id="email-response-address" style={{ fontWeight: 'bold', fontFamily: 'Arial' }}>

To: {response.address}

</div>

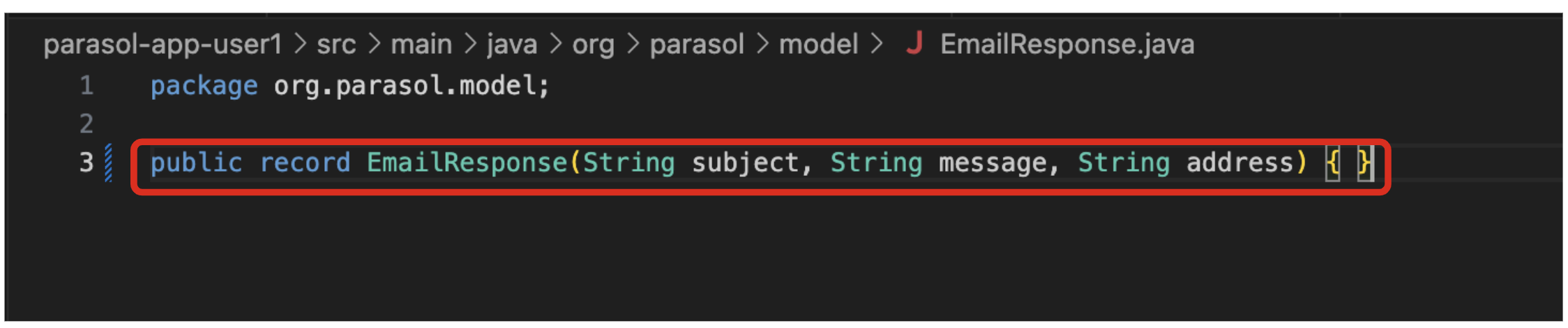

Additionally, we must add the address field to the REST Service’s JSON Response.

Open the src/main/java/org/parasol/model/EmailResponse.java file and replace the Java record signature with the following code.

|

The new field you are adding for the email address must be first in the EmailResponse record signature. LangChain4j uses this to suggest JSON response syntax to the LLM, and our examples in the system prompt have the email address first in the response. If the examples do not match LangChain4j’s suggestion, the email address is sometimes left off the response and will be blank in the UI. |

public record EmailResponse(String address, String subject, String message) { }
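The ordering requirement can be seen from the record itself: Java reports record components in declaration order, so any tool that derives a JSON hint from the record sees address first. The sketch below illustrates this using only JDK reflection. The `jsonHint` helper and the `<string>` placeholder are hypothetical and do not reproduce how LangChain4j actually builds its format instructions.

```java
import java.lang.reflect.RecordComponent;
import java.util.Arrays;
import java.util.stream.Collectors;

class RecordOrderSketch {
    // Same shape as the record in the lab, nested here so the sketch is self-contained.
    record EmailResponse(String address, String subject, String message) { }

    // Hypothetical helper: builds a JSON-shaped hint from the components in declaration order.
    static String jsonHint(Class<? extends Record> type) {
        return Arrays.stream(type.getRecordComponents())
                .map(RecordComponent::getName)
                .map(name -> "\"" + name + "\": \"<string>\"")
                .collect(Collectors.joining(", ", "{ ", " }"));
    }

    public static void main(String[] args) {
        // prints { "address": "<string>", "subject": "<string>", "message": "<string>" }
        System.out.println(jsonHint(EmailResponse.class));
    }
}
```

Moving `address` to the end of the record header would move it to the end of this hint as well, which would no longer match the examples in the system prompt.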

Now let’s revisit the web form and test out the new AI-generated attribute:

- Reload the Email generate page. It takes 10 to 20 seconds to recompile and apply the changes in Quarkus dev mode.
- Copy and paste the new claim #1 example from the prior section into the form.
- Click Submit.
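If the address still comes back blank or unexpected while testing, the application could also check it defensively before displaying it. The following is a minimal sketch, assuming the four forwarding addresses from the system prompt are the only valid values. The `AddressCheckSketch` class and `hasValidAddress` method are hypothetical and are not part of the Parasol code base.

```java
import java.util.Set;

class AddressCheckSketch {
    // The four forwarding addresses named in the system prompt.
    static final Set<String> ALLOWED = Set.of(
            "new.claim@parasol.com",
            "existing.claim@parasol.com",
            "customer.service@parasol.com",
            "discard@parasol.com");

    // Same shape as the record in the lab, nested here so the sketch is self-contained.
    record EmailResponse(String address, String subject, String message) { }

    // Returns true only when the model supplied one of the expected addresses.
    static boolean hasValidAddress(EmailResponse response) {
        String address = response.address();
        return address != null && ALLOWED.contains(address.trim());
    }

    public static void main(String[] args) {
        EmailResponse ok = new EmailResponse("new.claim@parasol.com", "New claim", "Dear Jane, ...");
        EmailResponse missing = new EmailResponse(null, "New claim", "Dear Jane, ...");
        System.out.println(hasValidAddress(ok));      // prints true
        System.out.println(hasValidAddress(missing)); // prints false
    }
}
```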

Stop the Quarkus dev mode environment by pressing Ctrl+C.

Congratulations! You have successfully modernized the Parasol Insurance application and improved the enterprise business processes surrounding customer email handling! We look forward to celebrating similar successes in your own applications!

13. Conclusion

We hope you have enjoyed this module!

Here is a quick summary of what we have learned:

- Learned how to use the Quarkus Dev UI to chat with a trained model
- Explored common use cases for gaining value from an LLM, such as agents and content analysis
- Developed a new prompt from scratch in support of a new business use case